Generative AI based on large language models (LLMs) has become a valuable tool for individuals and businesses, but also for cybercriminals. Its ability to process large amounts of data and quickly generate results has contributed to its widespread adoption.

AI in the hands of cybercriminals

According to a report from Abnormal Security, generative AI (GenAI) is […]

As large language models (LLMs) become more prevalent, a comprehensive understanding of the LLM threat landscape remains elusive. But this uncertainty doesn’t mean progress should grind to a halt: Exploring AI is essential to staying competitive, meaning CISOs are under intense pressure to understand and address emerging AI threats. While the AI threat landscape changes […]

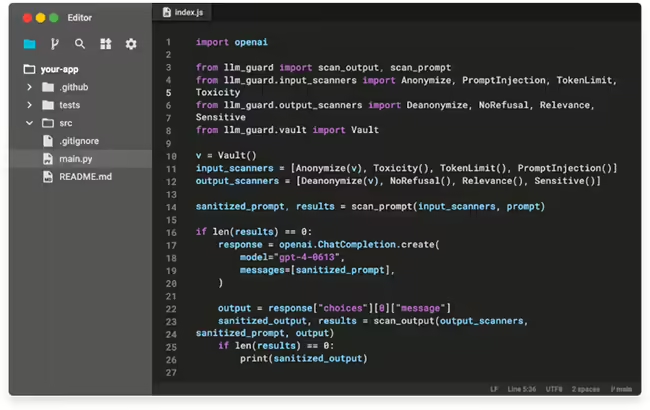

LLM Guard is a toolkit designed to fortify the security of large language models (LLMs) and to be easy to integrate and deploy in production environments. It provides extensive evaluators for both the inputs and outputs of LLMs, offering sanitization, detection of harmful language and data leakage, and protection against prompt injection and jailbreak attacks. LLM […]