The financially motivated threat actor FIN7 targeted a large U.S. car maker with spear-phishing emails aimed at employees in the IT department, attempting to infect systems with the Anunak backdoor.

Mar 13, 2024 | Newsroom | Large Language Model / AI Security
Google’s Gemini large language model (LLM) is susceptible to security threats that could cause it to divulge system prompts, generate harmful content, and carry out indirect injection attacks. The findings come from HiddenLayer, which said the issues impact consumers using Gemini Advanced with Google Workspace as well […]

Generative AI based on large language models (LLMs) has become a valuable tool for individuals and businesses, but also for cybercriminals. Its ability to process large amounts of data and quickly generate results has contributed to its widespread adoption.
AI in the hands of cybercriminals
According to a report from Abnormal Security, generative AI (GenAI) is […]

3rd Party Risk Management, Application Security, Governance & Risk Management
HHS: Compromise at Large Pharma Software and Services Firm Puts Entities at Risk
Marianne Kolbasuk McGee (HealthInfoSec) • January 25, 2024
Federal authorities warn that a self-hosted version of remote access product ScreenConnect from ConnectWise was compromised in 2023 at a […]

As large language models (LLMs) become more prevalent, a comprehensive understanding of the LLM threat landscape remains elusive. But this uncertainty doesn’t mean progress should grind to a halt: Exploring AI is essential to staying competitive, meaning CISOs are under intense pressure to understand and address emerging AI threats. While the AI threat landscape changes […]

The DNA testing company 23andMe is investigating whether a large trove of customer data was stolen from the company after information about the firm’s clients was offered for sale on a cybercrime forum earlier this week. On Sunday, a post on a popular forum where stolen data is traded and sold claimed to have “the […]

Stream-jacking attacks have gained significant traction on large streaming services in recent months, with cybercriminals targeting high-profile accounts (with a large follower count) to send their fraudulent ‘messages’ across to the masses. Starting from the fact that various takeovers in the past resulted in channels morphing into impersonations of known public figures (e.g. Elon Musk, […]

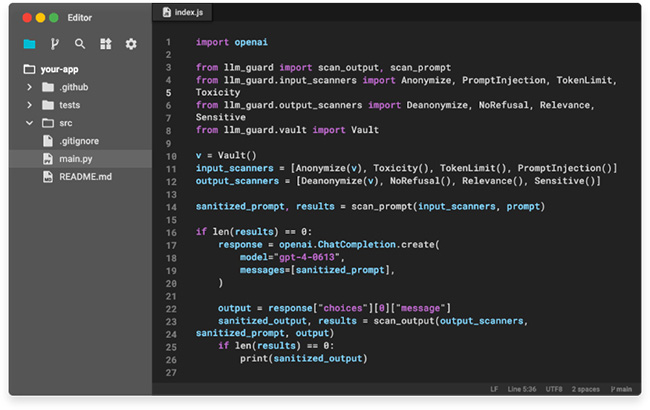

LLM Guard is a toolkit designed to fortify the security of Large Language Models (LLMs) and to be easy to integrate and deploy in production environments. It provides extensive evaluators for both the inputs and outputs of LLMs, offering sanitization, detection of harmful language and data leakage, and protection against prompt injection and jailbreak attacks. LLM […]