This attack has been able to extract the entire projection matrix of OpenAI’s language models for a cost ranging from a few dollars to several thousand, depending on the model’s size and the number of queries.
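To make the idea of extracting a projection matrix more concrete, here is a toy sketch of one ingredient such an attack can rely on: every logit vector a model returns is a linear image of a much smaller hidden state, so a stack of logit vectors has rank equal to the hidden dimension. Nothing below queries a real API. The simulated model, the function names `simulate_logits` and `matrix_rank`, and all sizes are assumptions made for illustration, and this is not presented as the exact procedure used against OpenAI's models.

```python
import random


def matrix_rank(rows, tol=1e-9):
    """Numerical rank via Gaussian elimination with partial pivoting."""
    m = [list(r) for r in rows]
    n_rows, n_cols = len(m), len(m[0])
    rank = 0
    for col in range(n_cols):
        if rank == n_rows:
            break
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(rank, n_rows), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue  # column is (numerically) dependent on earlier ones
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, n_rows):
            factor = m[r][col] / m[rank][col]
            for c in range(col, n_cols):
                m[r][c] -= factor * m[rank][c]
        rank += 1
    return rank


def simulate_logits(num_queries, vocab_size, hidden_dim, seed=0):
    """Hypothetical stand-in for a model: logits = W @ h for a secret W."""
    rng = random.Random(seed)
    # Secret projection matrix W with shape (vocab_size, hidden_dim).
    w = [[rng.gauss(0, 1) for _ in range(hidden_dim)] for _ in range(vocab_size)]
    logits = []
    for _ in range(num_queries):
        # Each query yields a different hidden state h.
        h = [rng.gauss(0, 1) for _ in range(hidden_dim)]
        logits.append(
            [sum(w[v][k] * h[k] for k in range(hidden_dim)) for v in range(vocab_size)]
        )
    return logits


# Collect more logit vectors than the (unknown) hidden dimension.
observed = simulate_logits(num_queries=10, vocab_size=16, hidden_dim=4)
estimated_hidden_dim = matrix_rank(observed)
```

In this toy setting the rank of the observed logit matrix reveals the hidden width even though `w` itself is never read directly. Against a real service, the number of queries needed grows with the model's hidden size, which is consistent with the cost scaling described above.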

Mar 13, 2024 | Newsroom | Large Language Model / AI Security

Google’s Gemini large language model (LLM) is susceptible to security threats that could cause it to divulge system prompts, generate harmful content, and carry out indirect injection attacks. The findings come from HiddenLayer, which said the issues impact consumers using Gemini Advanced with Google Workspace as well […]

Generative AI based on large language models (LLMs) has become a valuable tool for individuals and businesses, but also for cybercriminals. Its ability to process large amounts of data and quickly generate results has contributed to its widespread adoption. AI in the hands of cybercriminals: According to a report from Abnormal Security, generative AI (GenAI) is […]

Data serialization languages, like Extensible Markup Language (XML) and YAML Ain’t Markup Language (YAML), are typically found in infrastructure-as-code management software. Understand the differences between XML and YAML, and the use cases for each, to maximize your automation potential in application development. XML and YAML provide administrators with many options to automate and structure data. However, knowing the differences enables […]

As large language models (LLMs) become more prevalent, a comprehensive understanding of the LLM threat landscape remains elusive. But this uncertainty doesn’t mean progress should grind to a halt: Exploring AI is essential to staying competitive, meaning CISOs are under intense pressure to understand and address emerging AI threats. While the AI threat landscape changes […]

Prompt injection is, thus far, an unresolved challenge that poses a significant threat to Large Language Model (LLM) integrity. This risk is particularly alarming when LLMs are turned into agents that interact directly with the external world, utilizing tools to fetch data or execute actions. Malicious actors can leverage prompt injection techniques to generate unintended and […]

The ZeroFont phishing technique exploits flaws in AI and natural language processing systems to insert hidden words or characters in emails, evading security filters and tricking recipients.
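As a rough illustration of the hidden-text idea, the sketch below separates what a recipient would see from text placed in elements styled with a zero font size. It is a minimal example that assumes the hidden text is marked with an inline `font-size: 0` style. The class `ZeroFontSplitter`, the function `split_zero_font`, and the sample email are hypothetical, and real messages can hide text in other ways (stylesheets, colors, offsets) that this does not cover.

```python
import re
from html.parser import HTMLParser

# Inline style that sets the font size to zero, e.g. "font-size:0" or "font-size: 0px;".
ZERO_FONT = re.compile(
    r"font-size\s*:\s*0(?:\.0+)?\s*(?:px|pt|em|rem|%)?\s*(?:;|$|!)",
    re.IGNORECASE,
)

# Elements that never have a closing tag, so they must not be tracked on the stack.
VOID_TAGS = {"br", "img", "hr", "meta", "input", "link"}


class ZeroFontSplitter(HTMLParser):
    """Collects visible text and zero-font-size text separately."""

    def __init__(self):
        super().__init__()
        self.stack = []    # one flag per open element: is its text hidden?
        self.visible = []
        self.hidden = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        parent_hidden = self.stack[-1] if self.stack else False
        # A child of a hidden element stays hidden.
        self.stack.append(parent_hidden or bool(ZERO_FONT.search(style)))

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if self.stack:
            self.stack.pop()

    def handle_data(self, data):
        is_hidden = bool(self.stack) and self.stack[-1]
        (self.hidden if is_hidden else self.visible).append(data)


def split_zero_font(html):
    """Return (visible_text, hidden_text) for an HTML fragment."""
    parser = ZeroFontSplitter()
    parser.feed(html)
    parser.close()
    return "".join(parser.visible), "".join(parser.hidden)


# Hypothetical email body: the reader sees "Password reset", while a filter
# reading the raw text sees the phrase broken up by invisible characters.
sample = (
    'Pass<span style="font-size:0">xq</span>word '
    're<span style="font-size: 0px;">zz</span>set'
)
visible_text, hidden_text = split_zero_font(sample)
```

A filter could compare `visible_text` with the raw text it would otherwise scan, and treat a non-empty `hidden_text` as a signal worth inspecting rather than as proof of phishing.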

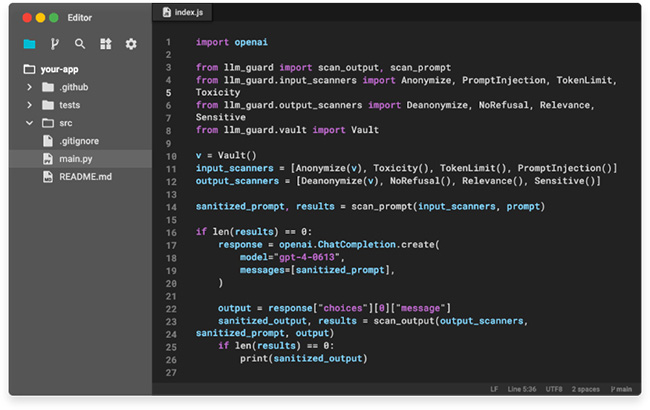

LLM Guard is a toolkit designed to fortify the security of Large Language Models (LLMs). It is built for easy integration and deployment in production environments. It provides extensive evaluators for both inputs and outputs of LLMs, offering sanitization, detection of harmful language and data leakage, and protection against prompt injection and jailbreak attacks. LLM […]